Fact Highlighting with HoT: Get Verifiable, Trustworthy AI Results

TL;DR

HoT (Highlight-of-Thought) tells your AI to use only the facts you pass in and to visibly highlight every name, number, date, ID and URL in the output, so you can scan and verify in seconds and stop hallucinations at source. It fits neatly into Make.com, Power Automate or n8n and your Microsoft stack (SharePoint, Teams) for invoice reminders, daily ops posts and lead triage. If a field is missing, the model marks [MISSING] instead of guessing. My rule when money or promises are involved: every figure and date must be highlighted. To start, add a short HoT prompt to one flow, test edge cases, and add a quick “highlight scan” before anything goes out.

Blog Outline

- What is the HoT Method (Highlight-of-Thought)?

- Why Do AI “Hallucinations” Happen, and Why Is It a Problem?

- How HoT Helps Get Verifiable, Trustworthy AI Outputs

- HoT in Action: Practical Example for Businesses

- Practical examples in Make.com, Power Automate, and n8n

- Implementation guide (step-by-step)

- Bringing HoT into Your Workflow (Next Steps)

Introduction:

Have you ever asked an AI assistant a question and gotten an answer that sounded confident – but turned out to be nonsense? If so, you’re not alone. This kind of AI blunder is often called a “hallucination,” where the system makes up information that isn’t true. For a small business owner, an AI’s confident but incorrect answer isn’t just annoying – it can cause real problems. Imagine a chatbot cheerfully telling a client the wrong invoice amount or confirming an appointment that doesn’t exist – not good!

I’ve encountered this firsthand – I once saw an AI-generated sales report that was way off the mark, essentially inventing data. That was a wake-up call. How do we trust AI outputs if we can’t tell what’s real? As someone who helps SMEs modernise with AI and automation, I know that trust and accuracy are everything. We need AI results we can verify quickly, without playing detective for each detail.

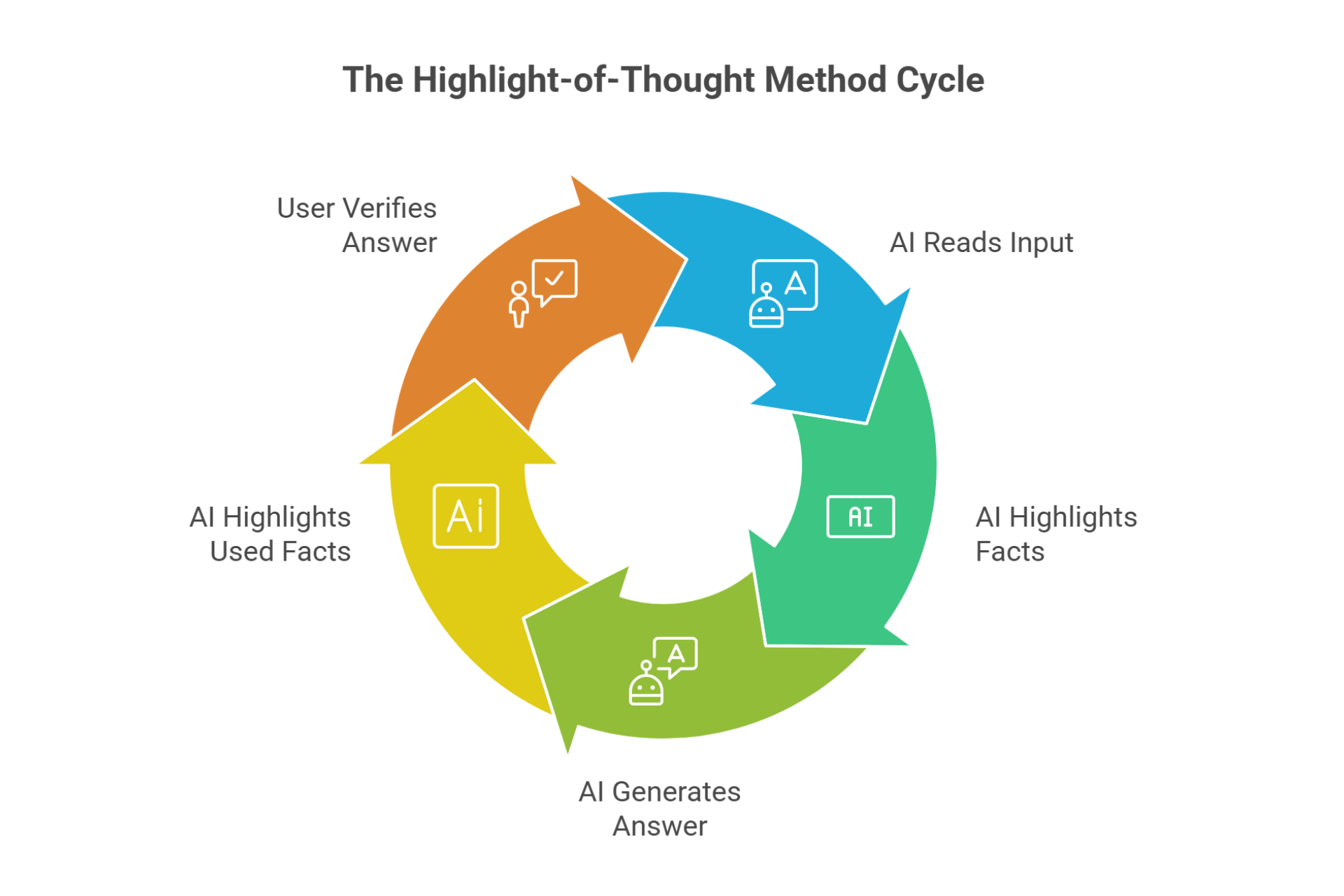

That’s why I’m excited about the HoT method, which stands for Highlight-of-Thought. This clever prompting technique basically forces your AI to cite, highlight, and rely on real facts when answering. In plain language, HoT makes the AI show how it knows what it knows. Instead of giving you a black-box answer, the AI will highlight the factual bits so you can check them. This way, answers become far more trustworthy and easy to verify.

What is the HoT Method (Highlight-of-Thought)?

The Highlight-of-Thought (HoT) method is a prompting strategy that gets an AI to highlight the key facts it uses in its response. Think of it like asking the AI to use a highlighter pen on the important parts of its answer. Just as you might highlight key facts in a report, the AI highlights the sources of its answer – so you can instantly see what it relied on.

How does this work in practice? With HoT prompting, you actually instruct the AI in a special way. First, the AI will re-read your question or input and mark up the crucial facts or details (essentially identifying “this is important”). Then, it generates its answer, and whenever it uses those important facts, it highlights them in the output. The final answer you see has certain words or numbers highlighted, pointing back to your original query or data. It’s like the AI is saying, “Here’s exactly where I got this info from.” By doing so, the AI is showing its work, and you can verify each claim at a glance.

A quick example: say you ask, “Our revenue was €500k in 2017 and €750k in 2022 – what’s the growth percentage?” A HoT-enabled AI might respond, “Your revenue grew by 50% (from **€500k** to **€750k**).” The bold text indicates highlights. You can immediately see it used the €500k and €750k from your question, so you know the answer is based on those real numbers (and you can double-check the math yourself). If the AI tried to include a number not in your question, that number wouldn’t be highlighted – a red flag that it might be making something up.
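If you want a script to do that first glance for you, here is a minimal Python sketch. It assumes the model marks highlights with Markdown bold (`**…**`), and the function name `ungrounded_highlights` is my own invention for illustration, not part of any HoT library.

```python
import re

# Assumption: highlights arrive as Markdown bold, e.g. **€500k**.
HIGHLIGHT = re.compile(r"\*\*(.+?)\*\*")


def ungrounded_highlights(question: str, answer: str) -> list[str]:
    """Return highlighted spans in the answer that do not appear verbatim in the question."""
    return [span for span in HIGHLIGHT.findall(answer) if span not in question]


question = "Our revenue was €500k in 2017 and €750k in 2022 - what's the growth percentage?"
answer = "Your revenue grew by 50% (from **€500k** to **€750k**)."

# Both highlighted figures trace back to the question, so nothing is reported.
print(ungrounded_highlights(question, answer))  # []

# Double-check the maths yourself: (750 - 500) / 500 = 0.5
growth = (750 - 500) / 500
print(f"{growth:.0%}")  # 50%
```

An empty list means every highlight traces back to your input. It does not prove the calculation is right, which is why the last two lines redo the arithmetic separately.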

This method was introduced by AI researchers as Highlighted Chain-of-Thought (HoT) prompting, specifically to combat the tendency of AIs to hallucinate. By grounding the AI’s response in the facts from the query (and making that grounding visible), HoT makes it much harder for the model to go off-script and invent things. Essentially, it tells the AI: “Stick to the facts I gave you, and make it obvious which facts you’re using.”

Not only does this approach yield more accurate answers, it also makes life easier for us humans. The highlights act like a map, letting us trace every important statement back to a source. In fact, tests found that people could verify answers more accurately and about 15 seconds faster when the answers had highlights. That’s a big deal when you’re a busy business owner trying to get quick, correct information. Instead of guessing whether an AI’s answer is right, you can see evidence of the facts and trust the output more.

Here’s a visual of HoT in action. In the image below, the AI’s answer on the right uses HoT highlighting, while the answer on the left is a normal response. You can see how the HoT version highlights key facts (in this case, the years 2017 and 2042) in both the question and the answer. This makes it super clear how the AI arrived at its result - you can literally see the AI’s train of thought.

An example of the HoT method: the AI highlights key facts (years in this case) in the question and answer, making it easy to trace the answer back to the question.

By having the AI highlight the factual building blocks of its answer, HoT provides instant transparency. It transforms the AI from a mysterious “oracle” into a more helpful assistant who shows you its references. For anyone who’s ever been uneasy about whether an AI-generated email or report is pulling figures out of thin air, HoT offers immediate peace of mind. You don’t have to simply trust the AI – you can verify what it’s saying quickly and easily.

Why Do AI “Hallucinations” Happen, and Why Is It a Problem?

AI "hallucination" is when the system makes up information that isn’t true – often very convincingly. It happens because these models don’t actually know facts; they predict words based on patterns. If an AI doesn’t have reliable info, it might confidently fill the gap with something that sounds plausible (essentially, it guesses). And since AI is great at sounding authoritative, those made-up answers can look legit. For instance, Google’s AI once bizarrely claimed geologists recommend eating one rock per day – a total fiction, but it sounded plausible in context.

This might be amusing in a trivial setting, but in business it’s dangerous. A hallucinating AI can mislead your customers or staff, cause bad decisions, or even land you in legal trouble. (One airline’s chatbot, for example, gave out such incorrect info that it ended up in a dispute with a customer.) For Irish businesses, trust is everything – you can’t afford an AI helper that occasionally spews nonsense. If your automated system emails a client the wrong invoice amount or promises a service you never offered, the small business automation dream quickly turns into a nightmare of apologies and damage control.

That's why trustworthy AI prompts are crucial. We all want the efficiency of AI, but we also need to trust its outputs. So how do we get AI that doesn’t hallucinate and stays verifiable? Enter the HoT method...

How HoT Helps Get Verifiable, Trustworthy AI Outputs

So, how does HoT actually help fix the hallucination problem and make AI outputs more reliable? The magic lies in grounding the AI in real information and being transparent about it. Here are a few big benefits of using HoT:

- AI sticks to the facts you give it: With HoT-style prompting, you’re basically telling the AI, “Don’t introduce any new facts that I didn’t provide. Use the info I gave you, and highlight exactly what you used.” This drastically lowers the chance of the AI going off on a frolic of its own. If the AI can’t point to a source for a statement, it’s far less likely to include that statement. It’s like enforcing a rule: no evidence, no inclusion. HoT serves as a strict teacher reminding the AI to show evidence for every claim.

- Instant fact-checking for you: When key points are highlighted, you can verify details at a glance. Did the AI pull the correct client name, the right date, the accurate number from your database? If those pieces are highlighted, you immediately see them and can cross-check with your source. If something that should be highlighted isn’t, that’s a clue the AI might have thrown in an extra tidbit that wasn’t provided – a cue to be sceptical. This built-in transparency means you spend less time worrying and double-checking.

- Easier to read and trust: HoT highlights make answers more skimmable and user-friendly. Important facts stand out in colour, which naturally draws your attention to the evidence. Many of us highlight text to make it easier to study or review – this is the same idea. Research actually showed that people find highlighted AI answers quicker to read and verify. It just feels more trustworthy when you see the proof in front of you.

- Encourages better AI behaviour: By training the AI to highlight and cite facts, we nudge it into a more truthful mindset. It’s similar to asking a student to show their work; they become more careful. An AI that knows it must highlight its sources is less likely to take wild leaps or use dubious info. In essence, HoT adds a layer of self-checking. (It’s not foolproof – if the AI misidentifies which facts are needed, it could highlight the wrong thing confidently. But overall, it significantly reduces nonsense.)
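The “should be highlighted but isn’t” clue from the list above can also be checked mechanically. The sketch below is only an illustration: it assumes Markdown bold highlights, and `unhighlighted_numbers` is a hypothetical helper name.

```python
import re


def unhighlighted_numbers(answer: str) -> list[str]:
    """Return numeric tokens that appear outside **bold** highlights."""
    # Blank out the highlighted spans first, then look for any digits left over.
    without_highlights = re.sub(r"\*\*.+?\*\*", " ", answer)
    return re.findall(r"\d[\d,.]*\d|\d", without_highlights)


draft = "Your total is **€1,200**, due **15 April 2025**. A 5% late fee applies."
print(unhighlighted_numbers(draft))  # ['5']
```

Anything this returns deserves a second look. It may be an invented detail, or a figure the model derived itself (such as a percentage), in which case you check the maths.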

To really see the difference, let’s compare traditional AI output vs. HoT output side by side:

| Aspect | Traditional AI Output (no HoT) | HoT-Enabled AI Output |
|---|---|---|
| Sticking to facts | May omit or alter facts; might even insert details not provided. | Stays on script – only uses facts you gave, and highlights each one used. |
| Finding errors | You have to manually cross-check the entire answer to catch mistakes. | Highlights act as flags – if a detail isn’t highlighted, you know it wasn’t in your input and might be invented. |
| User confidence | Can be low – you’re unsure which parts to trust without verification. | High – you see evidence for each key point, boosting your confidence in the answer. |
| Verification time | Longer – you might spend a while checking if each piece of info is correct. | Shorter – you can scan the highlights and quickly verify against your data. |
| Risk of hallucination | Higher – the AI might stray and add unsupported info. | Lower – the AI is constrained to highlighted facts, greatly reducing made-up content. |

As you can see, HoT can turn an AI’s answer from a mysterious monologue into a transparent, evidence-backed response. It’s like the difference between an employee who makes claims off the top of their head versus one who cites a file or email for every claim. Naturally, you’d trust the one providing sources more.

Now, a quick reality check: HoT doesn’t make an AI infallible. If your AI is confused or the input data itself is wrong, the AI’s answer could still be wrong – it’ll just be wrong with highlights. In fact, one study found that when an AI does make a mistake, having highlights can sometimes trick people into trusting the incorrect answer more (because it looks so well-supported). So HoT isn’t a license to turn your brain off; think of it as a helpful assistant, not a guarantee of truth. You still need to keep a critical eye on important outputs. That said, for everyday business use where we want to minimize errors and eliminate obvious hallucinations, HoT is a huge improvement in making AI a reliable partner rather than a wildcard.

Overall, HoT aligns AI outputs with the verifiable facts you provide and presents answers in a way that’s easy to trust and double-check. It flips the script from “just trust me” to “here’s why you can trust me.” And when you integrate HoT into your workflows, you get the benefits of AI speed without the constant worry about accuracy.

HoT in Action: Practical Example for Businesses

Let’s look at a quick example of HoT in action for a small business automation scenario:

Example: Error-Free Invoicing Automation

Scenario: You use a CRM or accounting software to manage client billing. Every month, you have an automated workflow (maybe a Power Automate flow or a Make.com scenario) that drafts invoice summary emails to clients. It uses an AI service to generate a friendly message: what work was done, the amount due, the due date, etc., pulling data from your system.

The usual way (without HoT): The AI takes the data and composes an email like, “Dear John, your total for March is €1,200. Please pay by April 15. Thank you for your business.” That sounds fine – but how do you know the AI didn’t mix up John’s amount with another client’s, or accidentally use last month’s figures? If there was a glitch in the input, the AI’s email might say €1,200 when the actual invoice is €1,100. You might not catch that before it goes out. John could be confused or upset, and now you have to apologise and fix the error. Not exactly the efficiency you hoped for.

With HoT method applied: We tweak the AI’s prompt to use Highlight-of-Thought. Now, when the AI drafts the email, it highlights the key facts it used: “Dear John, your total for March is **€1,200**. Please pay by **April 15**. Thank you for your business.” Before sending this email, you (or your staff) take a quick glance. You see €1,200 is highlighted – you cross-check that with the invoice total in your system. April 15 is highlighted – you confirm that’s the correct due date on record. Everything matches up. You send it off with confidence. If something were off, it would jump out at you: for example, if the email said €1,200 but your system shows €1,100, the highlighted figure would make that discrepancy hard to miss. In other words, HoT turns this into a quick verification step (a few seconds of eyeballing highlights) that can save you from costly billing mistakes. In fact, I helped a local design agency in Cork implement this, and it gave them great peace of mind (the owner said it was like having the figures double-underlined for them).
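The same cross-check can run as a step in the flow before a human ever looks at the draft. Here is a rough sketch under a few assumptions: highlights are Markdown bold, the record values are formatted exactly as they should appear in the email, and the field names and `fields_not_highlighted` helper are hypothetical.

```python
import re


def fields_not_highlighted(record: dict[str, str], email: str) -> dict[str, str]:
    """Return record fields whose exact value does not appear as a highlight in the email."""
    highlighted = set(re.findall(r"\*\*(.+?)\*\*", email))
    return {field: value for field, value in record.items() if value not in highlighted}


# What the accounts system says (illustrative values from the example above).
record = {"amount_due": "€1,100", "due_date": "April 15"}
email = "Dear John, your total for March is **€1,200**. Please pay by **April 15**. Thank you for your business."

print(fields_not_highlighted(record, email))  # {'amount_due': '€1,100'}
```

A non-empty result means the draft should be held back: the system says €1,100, but no highlight in the email carries that figure.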

Even in this simple example, you can see HoT’s value. The business owner doesn’t have to trust blindly that the AI got it right – the highlighted facts provide immediate assurance that the email is based on the right data. It’s a safety net that barely costs any time to use, but it can prevent a ton of potential hassle.

Practical examples in Make.com, Power Automate, and n8n

Below are three common patterns I build for our clients. Each one uses HoT to keep the AI anchored to correct facts.

1. Invoicing & accounts receivable reminder (Make.com or Power Automate)

Goal: Draft polite reminder emails with the right figures and dates, straight from your accounts system or CRM.

Flow:

- Trigger: Overdue invoice found (e.g., from Xero/QuickBooks/ERP via Make.com, or Dataverse/Excel/SharePoint via Power Automate).

- Collect facts: Client name, invoice number, amount due, due date, link to pay, account manager.

HoT prompt (summary):

- “Write a friendly reminder. Use only the facts provided and highlight each one in the email.”

- “If a fact is missing, leave a short note: [MISSING: …]. Do not invent details.”

Result with HoT:

“Hi **Murphy Electrical**, just a reminder that invoice **INV-20314** for **€1,200** was due on **15 April 2025**. You can pay here: **https://…**. If you have any questions, **Aoife Byrne** is happy to help.”

In seconds, a teammate checks the highlights against the source record. Send with confidence.
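To make the prompt for this pattern concrete, here is one way it could be assembled in a code step before the AI call. Treat it as a sketch: the field names follow the list used later in the implementation guide, `build_hot_prompt` is a hypothetical helper, and the exact wording is something you would tune for your own model.

```python
from typing import Optional

# Assumption: these are the only facts the model is allowed to use.
REQUIRED_FIELDS = ["ClientName", "InvoiceNumber", "AmountDue", "DueDate", "PaymentURL", "AccountManager"]


def build_hot_prompt(facts: dict[str, Optional[str]]) -> str:
    """Build a HoT-style prompt, marking absent facts as [MISSING: ...] up front."""
    lines = []
    for name in REQUIRED_FIELDS:
        shown = facts.get(name) or f"[MISSING: {name}]"
        lines.append(f"- {name}: {shown}")
    return (
        "Write a friendly invoice reminder email.\n"
        "Use only the facts provided and highlight each one in **bold**.\n"
        "If a fact is missing, leave a short note: [MISSING: field name]. Do not invent details.\n\n"
        "Facts:\n" + "\n".join(lines)
    )


prompt = build_hot_prompt({
    "ClientName": "Murphy Electrical",
    "InvoiceNumber": "INV-20314",
    "AmountDue": "€1,200",
    "DueDate": "15 April 2025",
    "AccountManager": "Aoife Byrne",
    # PaymentURL deliberately left out to show the [MISSING] path.
})
print(prompt)
```

Marking the gap in the prompt itself means the model never sees an empty slot it might be tempted to fill.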

2. SharePoint / Teams daily ops summary (Power Automate)

Goal: Post a clear morning update to a Teams channel based on a SharePoint list of jobs.

Flow:

- Trigger: 8:00 a.m. on weekdays.

- Collect facts: Job ID, client, location, assigned staff, time window, key risk notes, materials on hold.

HoT prompt (summary):

- “Summarise today’s jobs. Highlight Job ID, client, time window, location, and any risk.”

- “Do not add any jobs that are not in the list.”

Result with HoT:

“**JOB-4721** - **Gallagher & Co** - **09:00–11:00** - **Sandyford** - Risk: **live traffic management**.”

Teams can skim, trust, and go. If one highlight looks off, it gets fixed before crews roll out.
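The “do not add any jobs that are not in the list” rule is easy to enforce after the fact. A small sketch, assuming job IDs always look like `JOB-` followed by digits (as in the example) and using a hypothetical helper name and a made-up second job line for illustration:

```python
import re


def unknown_job_ids(source_ids: set[str], summary: str) -> set[str]:
    """Return job IDs mentioned in the summary that are not in the source list."""
    mentioned = set(re.findall(r"JOB-\d+", summary))
    return mentioned - source_ids


source_ids = {"JOB-4721"}  # IDs read from the SharePoint list
summary = (
    "**JOB-4721** - **Gallagher & Co** - **09:00–11:00** - **Sandyford** - Risk: **live traffic management**.\n"
    "**JOB-4799** - a job that is not in the list"
)
print(unknown_job_ids(source_ids, summary))  # {'JOB-4799'}
```

If the set is not empty, the post is held back instead of going to the channel.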

3. Lead qualification & CRM updates (n8n or Make.com)

Goal: When a form or email comes in, draft a qualification summary for the CRM and a reply to the lead.

Flow:

- Trigger: New submission in Webflow/Typeform/Outlook.

- Collect facts: Name, company, phone, email, services requested, budget range, timeline.

HoT prompt (summary):

- “Create a qualification summary using only the provided details. Highlight name, company, service, and timeline.”

- “If budget is missing, mark [MISSING].”

Result with HoT:

“Lead: **Siobhán Daly**, **Harbour Kitchens**, service: **retail fit-out**, timeline: **June–July**. Budget: [MISSING].”

Sales sees what’s solid and what needs a quick call, without guessing.
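The `[MISSING]` markers are also handy for routing. The sketch below pulls out which fields were flagged so the flow can, for instance, create a follow-up task. It assumes the summary uses a `Label: [MISSING]` layout like the example; `missing_fields` is a hypothetical name.

```python
import re


def missing_fields(summary: str) -> list[str]:
    """Return the labels of fields the model marked as [MISSING]."""
    # Matches "Budget: [MISSING]" and also "Budget: [MISSING: some note]".
    return re.findall(r"(\w+):\s*\[MISSING[^\]]*\]", summary)


summary = "Lead: Siobhán Daly, Harbour Kitchens, service: retail fit-out, timeline: June–July. Budget: [MISSING]."
print(missing_fields(summary))  # ['Budget']
```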

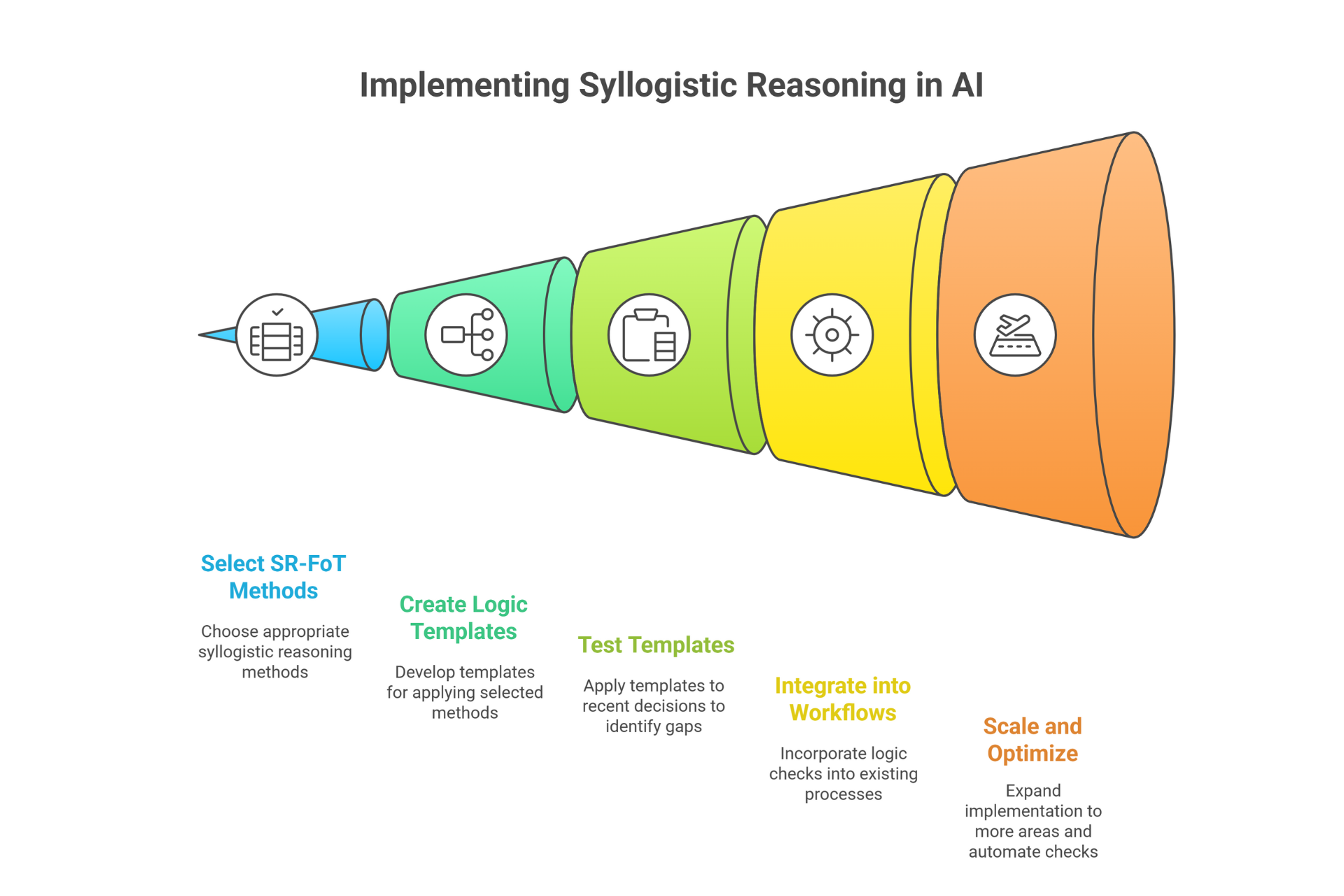

Implementation guide (step-by-step)

1. Pick one workflow

Choose a spot with repeated manual checking: invoice reminders, daily ops posts, or sales follow-ups. HoT shines where the same kinds of facts must be correct every time.

2. Define the facts

List the exact fields the AI is allowed to use: ClientName, InvoiceNumber, AmountDue, DueDate, PaymentURL, AccountManager. Use the same names in your prompt and pass them in cleanly from your tool (Make.com, Power Automate, n8n).
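One way to keep that list honest is to check the incoming payload against it before the AI step runs. This is a sketch only: `validate_payload` is a hypothetical helper, and in Make.com, Power Automate or n8n you would place the equivalent logic in whatever code or filter step the tool offers.

```python
ALLOWED_FIELDS = {"ClientName", "InvoiceNumber", "AmountDue", "DueDate", "PaymentURL", "AccountManager"}


def validate_payload(payload: dict) -> tuple[list[str], list[str]]:
    """Return (unexpected, absent) field names, each sorted for stable output."""
    keys = set(payload)
    return sorted(keys - ALLOWED_FIELDS), sorted(ALLOWED_FIELDS - keys)


unexpected, absent = validate_payload(
    {"ClientName": "Murphy Electrical", "AmountDue": "€1,200", "Notes": "call back Friday"}
)
print(unexpected)  # ['Notes']
print(absent)      # ['AccountManager', 'DueDate', 'InvoiceNumber', 'PaymentURL']
```

Unexpected fields are dropped before prompting, and absent ones become `[MISSING]` entries instead of silent gaps.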

3. Add the HoT prompt

Paste one of the templates above into your AI step. If your system supports rich text, ask for bold on facts. If not, use [[FACT: …]].
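If you go the `[[FACT: …]]` route, a couple of lines of code can turn the tags back into bold later, or pull out the tagged values for checking. A minimal sketch, with hypothetical helper names:

```python
import re

FACT_TAG = re.compile(r"\[\[FACT:\s*(.+?)\]\]")


def extract_facts(text: str) -> list[str]:
    """Return the values wrapped in [[FACT: ...]] tags, in order of appearance."""
    return FACT_TAG.findall(text)


def facts_to_bold(text: str) -> str:
    """Replace each [[FACT: value]] tag with Markdown bold."""
    return FACT_TAG.sub(r"**\1**", text)


plain = "Invoice [[FACT: INV-20314]] for [[FACT: €1,200]] is overdue."
print(extract_facts(plain))  # ['INV-20314', '€1,200']
print(facts_to_bold(plain))  # Invoice **INV-20314** for **€1,200** is overdue.
```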

4. Test with edge cases

Try: missing due date, strange characters in a name, very large amounts, multiple invoices, or time zones. Confirm the AI flags [MISSING] and doesn’t guess.
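A small test harness makes these checks repeatable. The sketch below does not call any model: the two outputs are hand-written stand-ins for what a model might return, and the record, client name and `check_output` helper are all hypothetical.

```python
import re
from typing import Optional


def check_output(record: dict[str, Optional[str]], output: str) -> list[str]:
    """Return a list of problems found in a model output for one test record."""
    problems = []
    empty = [name for name, value in record.items() if value is None]
    markers = len(re.findall(r"\[MISSING", output))
    if markers < len(empty):
        problems.append(f"expected {len(empty)} [MISSING] marker(s), found {markers}")
    for name, value in record.items():
        if value is not None and value not in output:
            problems.append(f"{name} value not found in output")
    return problems


# Edge case: missing due date plus unusual characters in the name.
record = {"ClientName": "O'Brien & Søn Ltd", "AmountDue": "€1,200", "DueDate": None}

good = "Hi **O'Brien & Søn Ltd**, **€1,200** is due on [MISSING: DueDate]."
guessed = "Hi **O'Brien & Søn Ltd**, **€1,200** is due on 30 April."

print(check_output(record, good))     # []
print(check_output(record, guessed))  # ['expected 1 [MISSING] marker(s), found 0']
```

Run it over every edge case in your list. The flow is only ready when each real model output comes back with an empty problem list.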

5. Set a “two-second check” rule

Before anything goes to a customer or a team channel, someone scans the highlights. If highlights look wrong or a key fact isn’t highlighted, you fix it right there.

6. Roll out and standardise

Once the first use case is steady, copy the pattern to the next. Keep a short internal standard like this:

- Always highlight: names, dates, numbers, URLs, IDs.

- Never invent: if in doubt, [MISSING].

- Escalate: if a critical fact is missing twice in a row, flag the upstream data source.
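The escalation rule in that standard can be tracked with a simple counter. A sketch, assuming “twice in a row” means two consecutive runs for the same field; the class name and default threshold are my own choices for illustration:

```python
from collections import defaultdict


class MissingFactTracker:
    """Count consecutive runs in which a field was missing."""

    def __init__(self, threshold: int = 2):
        self.threshold = threshold
        self._streaks: dict[str, int] = defaultdict(int)

    def record(self, field: str, is_missing: bool) -> bool:
        """Update the streak for a field; return True when the upstream source should be flagged."""
        if is_missing:
            self._streaks[field] += 1
        else:
            self._streaks[field] = 0  # a good run resets the streak
        return self._streaks[field] >= self.threshold


tracker = MissingFactTracker()
print(tracker.record("DueDate", True))   # False (first miss)
print(tracker.record("DueDate", True))   # True  (second miss in a row: escalate)
print(tracker.record("DueDate", False))  # False (streak reset)
```

In a real flow the streaks would need to live somewhere persistent, such as a SharePoint list or a data store in your automation tool, since each run starts fresh.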

Bringing HoT into Your Workflow (Next Steps)

You might be eager to try HoT in your own workflows. Here are a few tips to get you started on the right foot:

- Start small, then iterate: Pick one AI-driven task and apply HoT prompting to it. Experiment and refine your approach based on results.

- Train your team & stay critical: Make sure your team knows to look for highlighted facts and still double-check crucial details. Highlights make verification easier, but they’re not a substitute for common sense.

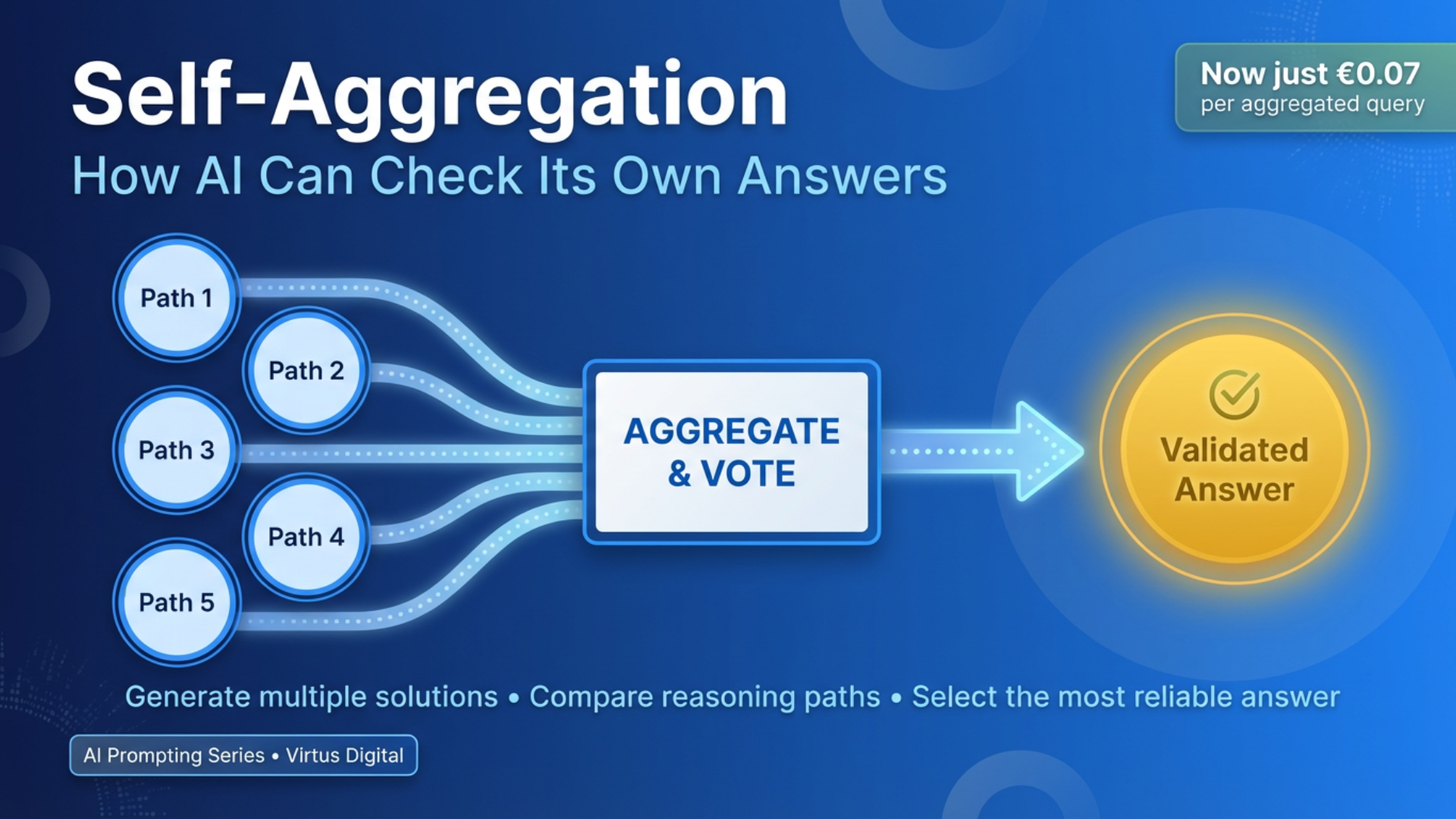

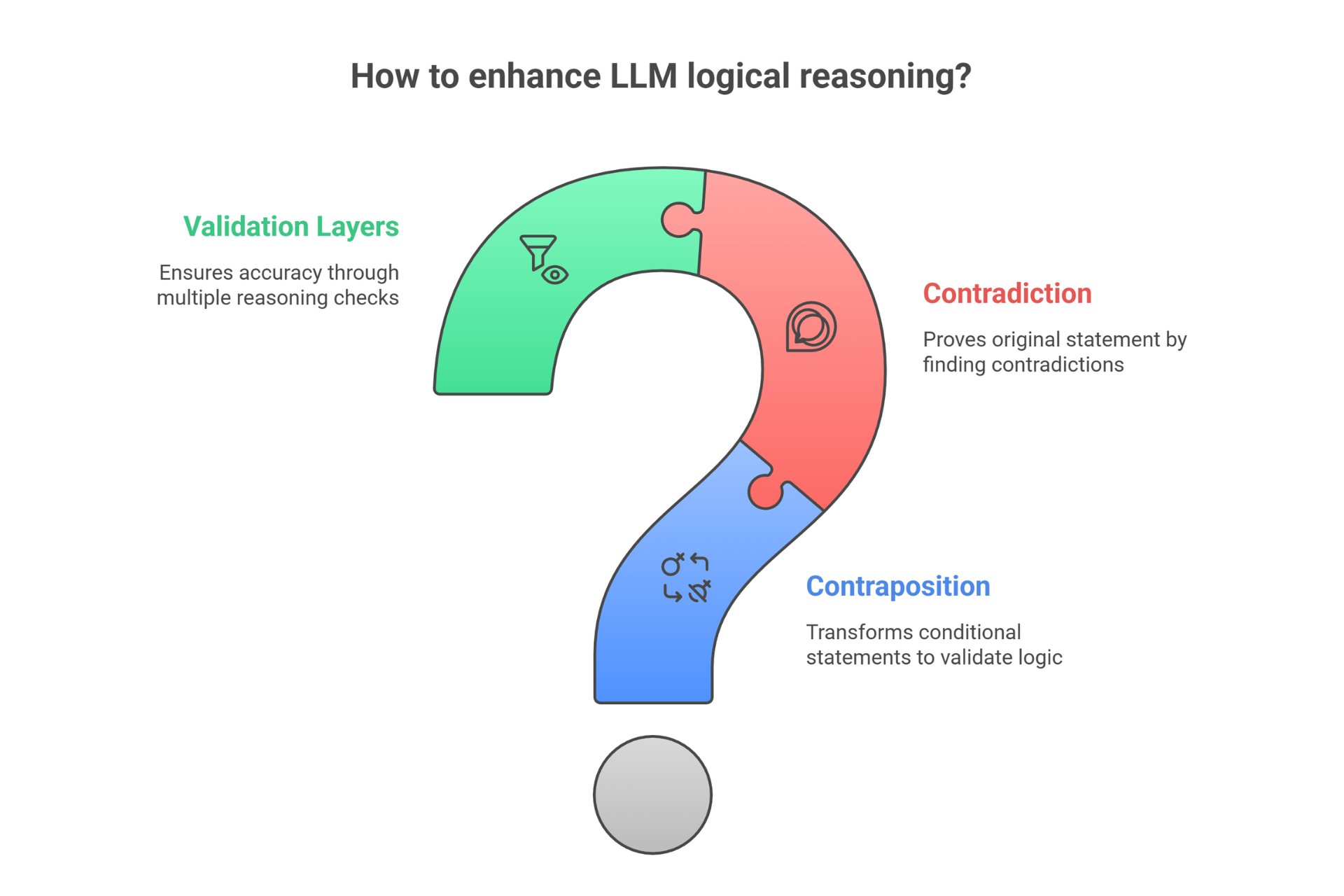

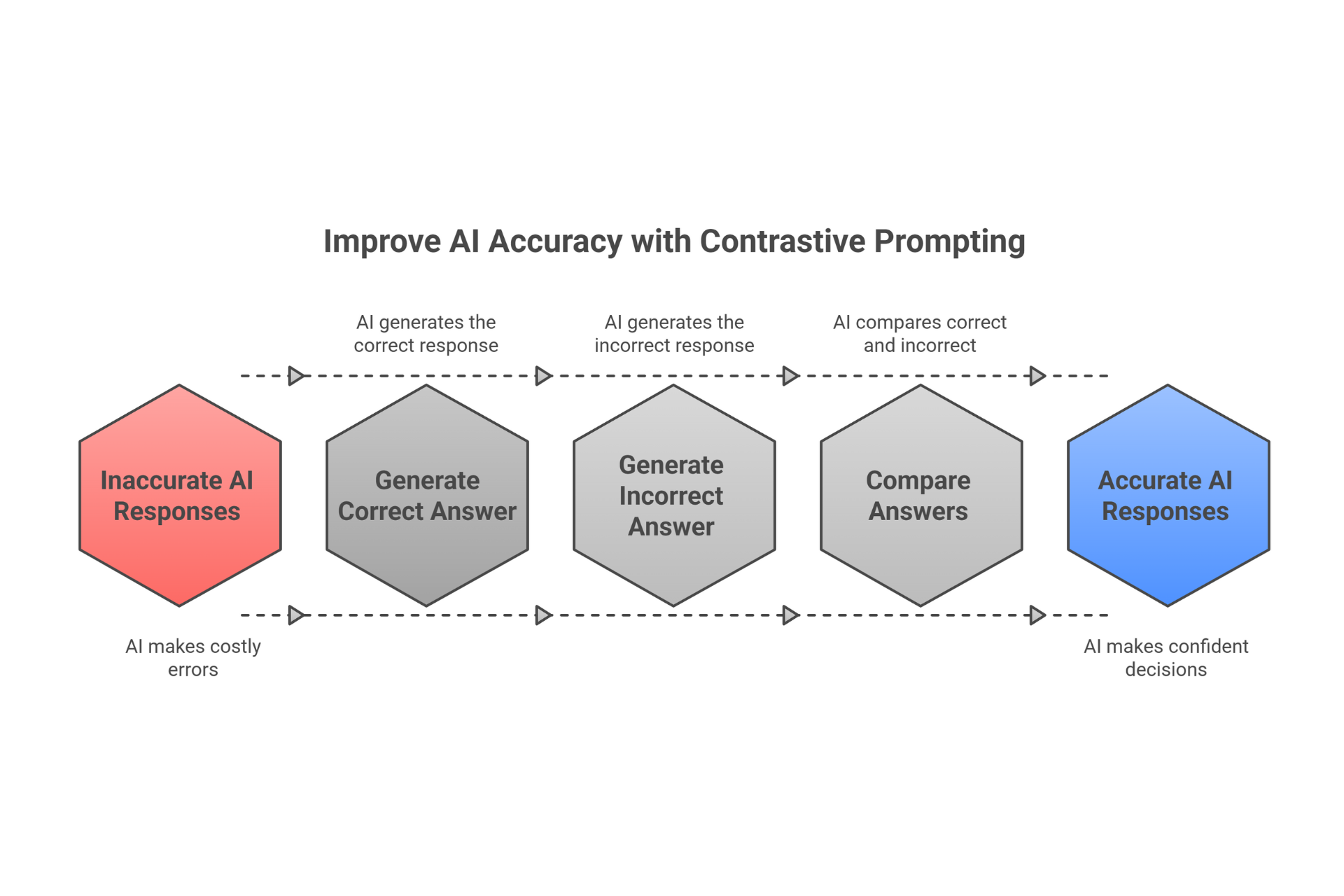

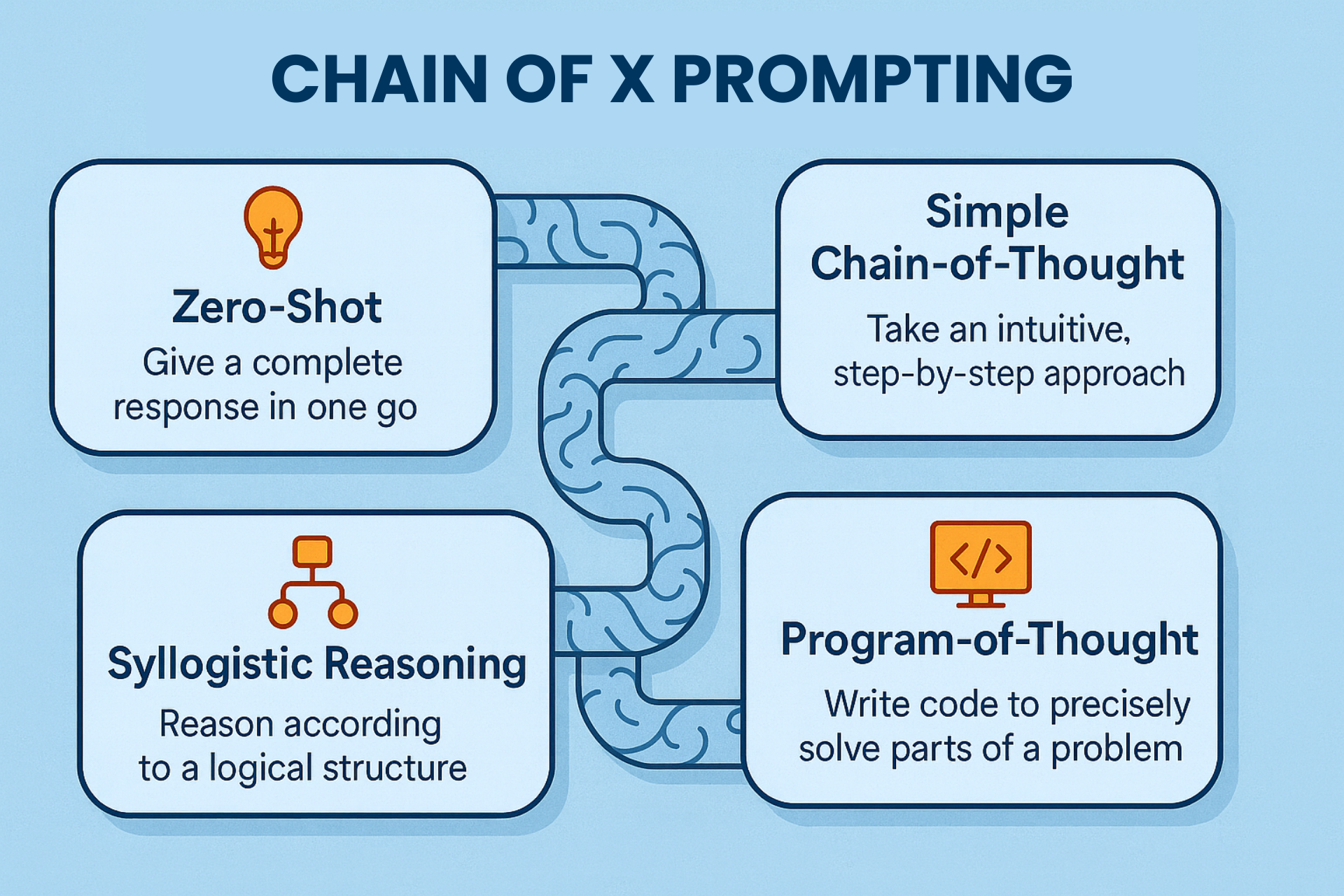

As AI prompting for business continues to evolve, techniques like HoT are going to be what separates useful AI solutions from novelty party tricks. At Virtus Digital, we’re dedicated to helping businesses get real results with AI – zero hallucinations, zero nonsense. The HoT method is just one of many advanced prompting strategies (as part of our AI Prompting Series) that can make AI a trustworthy partner in your work.

If you’re keen to make your small business automation smarter and more reliable, consider giving HoT a try. And if you need a hand implementing it – or want to explore other cutting-edge AI prompting techniques – we’re here to help. After all, the goal is to let AI handle the heavy lifting without giving you new problems to worry about. With approaches like Highlight-of-Thought, you can finally get AI outputs that you don’t have to second-guess, freeing you up to focus on running your business.

Key Takeaway: You don’t have to accept AI “hallucinations” as an inevitability. By using methods like HoT to highlight and verify facts, you can demand (and get) AI results that are trustworthy. It’s about working smarter with AI, not just working faster. So go ahead – shine a light on those AI answers, and enjoy the confidence of knowing the facts are solid.

Ready to explore more ways to make AI work for you? Keep an eye on our Virtus Digital AI Prompting Series for more insights, or get in touch with us to supercharge your automation with verifiable, reliable AI today.