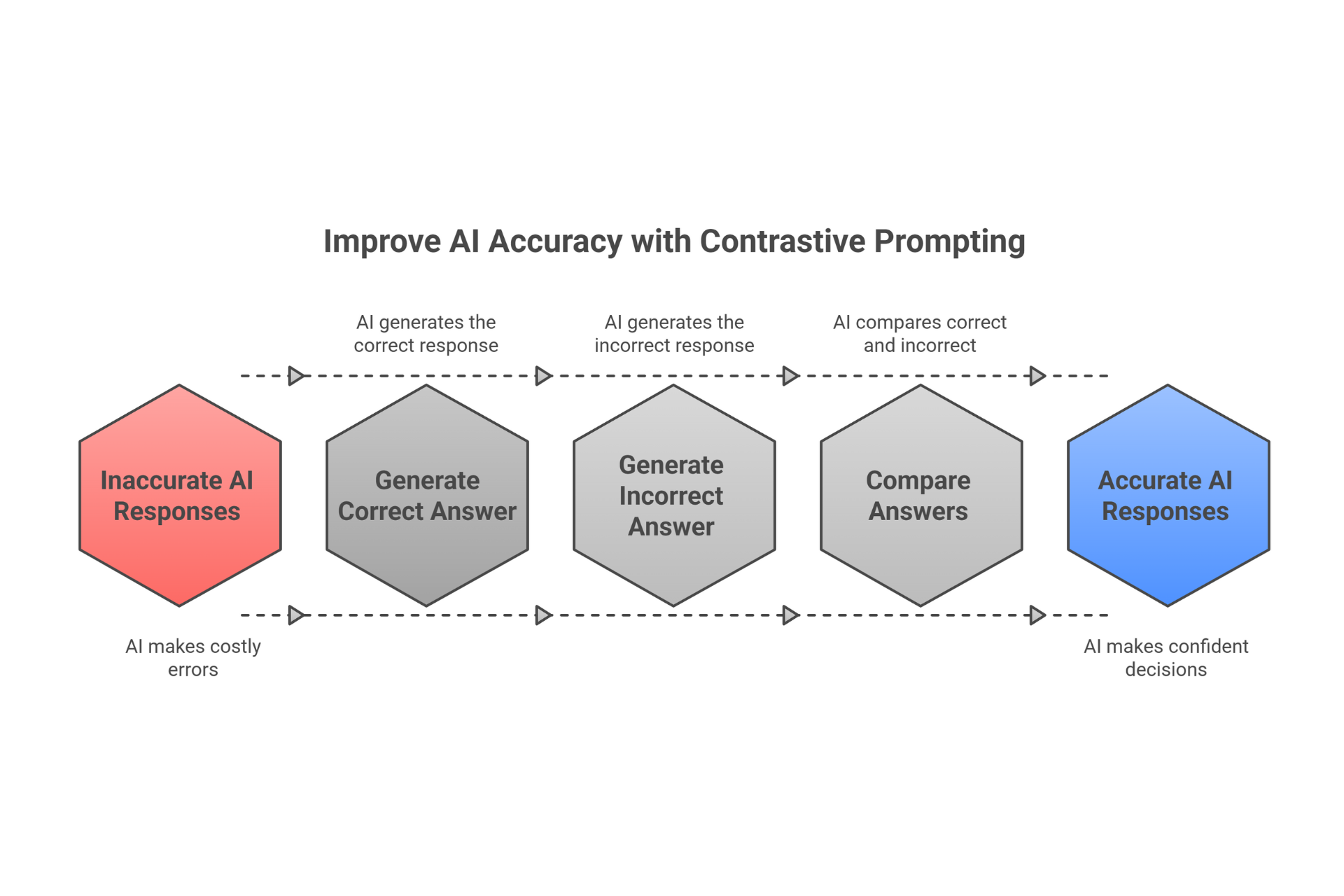

Contrastive Prompting: How Teaching AI Right vs Wrong Examples Boosts Accuracy by up to 10%

TL;DR

Contrastive prompting is dead simple yet powerful: ask your AI to generate BOTH correct and incorrect answers, then compare them. This forces critical thinking, explicitly identifies errors, and enables self-correction - boosting accuracy by 5-10% without any model retraining or extra computational cost. Just add "show me both the right and wrong approach" to your prompts and watch quality leap. Perfect for high-stakes decisions, complex reasoning, legal reviews, financial analysis, or any task where understanding failure modes matters as much as finding solutions. Implementation takes minutes; results are immediate.

Key Takeaway: Teaching AI what's wrong is just as powerful as teaching it what's right.

Blog Outline

- The Science: Why Your AI Thinks Better When Shown What NOT to Do

- How Contrastive Prompting Actually Works (Step-by-Step)

- Real Prompt Examples You Can Copy Today

- Contrastive Prompting vs Other AI Techniques

- Common Mistakes (And How to Avoid Them)

- When NOT to Use Contrastive Prompting

- FAQ: Your Questions Answered

Think of contrastive prompting as teaching your AI the way you'd train a new hire. You don't just show them the correct process – you also point out common mistakes and explain why they're mistakes.

The core concept: You explicitly ask your AI to generate both a correct response AND an incorrect response, then compare them before providing a final answer.

Why this is powerful: By forcing the AI to articulate what's wrong (not just what's right), you activate critical thinking mechanisms that otherwise stay dormant. The AI can't just pattern-match its way to an answer – it has to actually reason about the difference between good and bad approaches.

Research from multiple studies shows this technique improves accuracy by 5-10% in complex reasoning tasks. That might not sound massive, but in high-stakes business decisions, that 5-10% difference is the line between a brilliant recommendation and an expensive mistake.

Here's the beautiful part: implementation is dead simple. You literally just add one line to your prompts:

"Give me both a correct and an incorrect answer to this problem, then compare them."

That's it. No model retraining. No technical expertise required. No additional computational cost. Just smarter prompting.

I've been testing this at Virtus Digital for the past three months across client projects. The results? Consistently better outputs, fewer revision rounds, and – most importantly – clients who trust the AI-assisted recommendations because they can see the reasoning process.

The Science: Why Your AI Thinks Better When Shown What NOT to Do

Ever notice how the best teachers don't just explain correct answers? They also dissect common mistakes, showing exactly where students go wrong. Contrastive prompting brings this teaching method to AI.

Here's what happens under the hood:

1. Cognitive dissonance activation

When you force an AI to generate opposing answers, it creates internal tension. The model has to identify and explain contradictions, which triggers deeper analysis mechanisms. It's like asking someone to argue both sides of a debate – they end up understanding the issue far better.

2. Enhanced attention distribution

Standard prompting lets AI take the path of least resistance. Contrastive prompting forces it to consider multiple paths simultaneously. This means the AI tracks critical reasoning points more carefully throughout the response, catching potential errors it would otherwise miss.

3. Explicit error identification

Instead of simply avoiding mistakes, the AI actively identifies them in the "wrong" answer. This is massive. When you explicitly articulate what's wrong, you're less likely to accidentally include it in your final answer. It's the difference between "don't make mistakes" (vague) and "here's specifically what a mistake looks like" (concrete).

4. Self-correction mechanism

By comparing correct vs. incorrect approaches side-by-side, the AI essentially peer-reviews itself. This catches logical inconsistencies, unsupported assumptions, and reasoning gaps that single-path prompting misses entirely.

5. Little additional cost

Contrastive prompting needs no retraining and no extra tooling. The response itself is longer, since the AI writes two answers and a comparison (the FAQ below estimates roughly 30-50% more tokens), but that overhead is often offset by fewer retries and revision rounds.

A study on contrastive reasoning found it particularly effective for:

- Mathematical problems (8% accuracy improvement)

- Logical puzzles (7% improvement)

- Cause-and-effect analysis (6% improvement)

- Strategic business decisions (5% improvement)

For Irish businesses dealing with compliance, financial projections, or contract reviews – areas where errors are costly – that improvement isn't just nice-to-have. It's the difference between confident decisions and expensive corrections.

How Contrastive Prompting Actually Works (Step-by-Step)

Let's break down exactly how to implement contrastive prompting, from basic to advanced.

Basic Implementation (30 Seconds)

Take any existing prompt and add this line:

[Your original question/task]

Let's give both a correct and an incorrect answer to this problem.
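If you send prompts from a script rather than a chat window, the same one-line addition can be automated. Here is a minimal Python sketch; the helper name `make_contrastive` and the sample question are made up for illustration and are not part of any library.

```python
# The contrastive instruction, exactly as given in the template above.
CONTRASTIVE_SUFFIX = "Let's give both a correct and an incorrect answer to this problem."


def make_contrastive(prompt: str) -> str:
    """Append the contrastive instruction to an existing prompt."""
    return f"{prompt.rstrip()}\n\n{CONTRASTIVE_SUFFIX}"


# Hypothetical task, used only to show the output shape.
print(make_contrastive("Should we renew our current hosting contract?"))
```

The result is a plain string, so it can be passed to whichever AI tool or API you already use.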

Example:

Calculate the ROI on hiring a new salesperson at €45k salary who's projected to bring in €200k in new revenue with 25% margin. Let's give both a correct and an incorrect answer to this problem.
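For reference, this is roughly the arithmetic you would expect the two answers to land on. It is a sketch under assumptions the prompt does not state: ROI is measured over one year, salary is the only cost, and the "incorrect" answer makes the common mistake of treating revenue as the return instead of margin.

```python
def roi(gain: float, cost: float) -> float:
    """Simple ROI: net gain divided by cost."""
    return (gain - cost) / cost


salary = 45_000
revenue = 200_000
margin = 0.25

# Correct: the return is the gross profit on the new revenue, not the revenue itself.
gross_profit = revenue * margin
roi_correct = roi(gross_profit, salary)

# Incorrect (assumed common mistake): using revenue as the return.
roi_incorrect = roi(revenue, salary)

print(f"Correct ROI:   {roi_correct:.1%}")    # 11.1%
print(f"Incorrect ROI: {roi_incorrect:.1%}")  # 344.4%
```

The gap between the two figures is exactly what the comparison step is meant to surface.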

Structured Implementation (2 Minutes)

For better results, specify the format you want:

[Your task]

Provide:

CORRECT APPROACH:

[Detailed explanation of the right method]

INCORRECT APPROACH:

[Common mistake or wrong method]

COMPARISON:

[Why the correct approach works and the incorrect one fails]

FINAL ANSWER:

[Your recommendation based on the analysis]
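One practical benefit of fixed section labels is that the reply becomes easy to pull apart in code. The sketch below builds the structured prompt and splits a reply back into its four sections. The function names are illustrative, and the parser assumes the model repeats each label on its own line followed by a colon, which real replies will not always do.

```python
import re

# Section labels and hints copied from the template above.
SECTIONS = {
    "CORRECT APPROACH": "Detailed explanation of the right method",
    "INCORRECT APPROACH": "Common mistake or wrong method",
    "COMPARISON": "Why the correct approach works and the incorrect one fails",
    "FINAL ANSWER": "Your recommendation based on the analysis",
}


def build_structured_prompt(task: str) -> str:
    """Return the task followed by the four labelled sections."""
    lines = [task.strip(), "", "Provide:"]
    for label, hint in SECTIONS.items():
        lines += ["", f"{label}:", f"[{hint}]"]
    return "\n".join(lines)


def split_sections(response: str) -> dict[str, str]:
    """Split a reply into sections, keyed by label.

    Assumes each label sits alone on its own line, followed by a colon.
    """
    labels = "|".join(re.escape(label) for label in SECTIONS)
    pattern = re.compile(rf"^({labels}):[ \t]*$", re.MULTILINE)
    # With one capturing group, split() yields
    # [preamble, label, body, label, body, ...].
    parts = pattern.split(response)
    return {label: body.strip() for label, body in zip(parts[1::2], parts[2::2])}
```

Having the sections as a dictionary makes it simple to, for example, log the "INCORRECT APPROACH" text separately for later review.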

Advanced Implementation (5 Minutes)

Combine with role-playing and domain expertise:

You are a [specific expert role with X years experience].

[Task/question with all relevant context]

Provide both a correct and incorrect approach:

1. CORRECT SOLUTION (what an expert would do):

- Methodology

- Reasoning

- Expected outcomes

- Why this works

2. INCORRECT SOLUTION (what beginners/competitors typically do wrong):

- The flawed approach

- Why people make this mistake

- Consequences

- Real-world examples of this failing

3. SIDE-BY-SIDE COMPARISON:

Create a table comparing both approaches across:

- Accuracy

- Risk level

- Cost implications

- Time requirements

- Long-term impact

4. FINAL RECOMMENDATION:

Based on the analysis, provide your expert recommendation with specific action steps.

This structure works beautifully for complex business decisions where you need thorough analysis. I've used it for client strategy recommendations, and it consistently produces outputs that clients find more trustworthy because they can see both sides of the reasoning.

Real Prompt Examples You Can Copy Today

Marketing Strategy Validation

I'm launching a B2B SaaS product targeting Irish SME accountants.

Budget: €5k/month for 6 months.

Show me both the correct and incorrect marketing approach:

CORRECT STRATEGY:

- Target channels and why

- Message positioning

- Budget allocation

- Success metrics

- Timeline

INCORRECT STRATEGY:

- What B2B SaaS companies commonly do wrong in Ireland

- Why accountants ignore those approaches

- Money wasted

- Typical failure points

COMPARISON:

Create a table showing 6-month projected outcomes for both strategies.

RECOMMENDATION:

Final action plan that implements the correct strategy while explicitly avoiding the identified pitfalls.

Why this works:

You get a strategy plus a warning system. The AI can't just regurgitate generic marketing advice – it has to actually think about what fails in this specific context (Irish market, SME accountants, limited budget).

Financial Decision Analysis

Scenario: Manufacturing company considering €200k equipment purchase.

Current production: 10k units/month at €5 margin per unit.

New equipment promises: 15k units/month production.

Financing: 5-year loan at 6.5% (Irish market rate).

Provide contrastive financial analysis:

SOUND ANALYSIS (correct):

- Break-even calculation

- Cash flow impact

- Risk factors specific to Irish manufacturing

- Hidden costs (maintenance, training, downtime)

- ROI timeline with conservative estimates

FLAWED ANALYSIS (common mistakes):

- What companies typically overlook

- Optimistic assumptions that don't hold

- Irish-specific regulatory costs missed

- Why these mistakes lead to buyer's remorse

COMPARISON:

Show 5-year projections for both approaches side-by-side.

FINAL RECOMMENDATION:

Should we buy? If yes, what protections/contingencies do we need?
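It helps to know the rough numbers before reading the AI's answer, so you can tell a sound analysis from a flawed one. The sketch below contrasts a naive payback calculation with one that includes the loan repayment. It assumes a fixed-rate loan with monthly compounding and a margin that stays at €5 per unit; the `hidden_costs` figure is a placeholder invented for illustration, not data from the scenario.

```python
def monthly_loan_payment(principal: float, annual_rate: float, years: int) -> float:
    """Standard amortised-loan payment, assuming monthly compounding."""
    r = annual_rate / 12
    n = years * 12
    return principal * r / (1 - (1 + r) ** -n)


PRICE = 200_000
MARGIN_PER_UNIT = 5
extra_units = 15_000 - 10_000
# Extra monthly margin if every additional unit is actually sold.
extra_margin_full = extra_units * MARGIN_PER_UNIT

# FLAWED: ignores interest and running costs, assumes all extra output sells.
naive_payback_months = PRICE / extra_margin_full

# SOUNDER: what share of the new capacity must sell just to cover monthly costs?
payment = monthly_loan_payment(PRICE, 0.065, 5)
hidden_costs = 3_000  # HYPOTHETICAL monthly maintenance, training, downtime
break_even_utilisation = (payment + hidden_costs) / extra_margin_full

print(f"Naive payback: {naive_payback_months:.0f} months")
print(f"Monthly loan payment: €{payment:,.0f}")
print(f"Break-even utilisation of new capacity: {break_even_utilisation:.0%}")
```

The naive figure answers "how fast could this pay off in the best case?", while the utilisation figure answers "how much demand do we need for this not to lose money?", which is usually the more useful question.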

Hiring Decision Logic

We're hiring a senior developer. Two candidates:

Candidate A: 10 years experience, wants €85k, available in 1 month

Candidate B: 4 years experience, wants €62k, available immediately

Key context:

- Critical project deadline in 6 weeks

- Team is overloaded (working 50+ hour weeks)

- Budget allows either candidate

Provide both correct and incorrect hiring reasoning:

CORRECT REASONING:

- Factors to consider beyond CV and cost

- Short-term vs. long-term impact

- Hidden costs of each choice

- Risk analysis

- Team dynamics implications

INCORRECT REASONING:

- Common shortcuts hiring managers take

- Why "cheaper/faster" often backfires

- Real costs of wrong hires in Irish market

- Typical blind spots

COMPARISON:

12-month cost and impact analysis for both choices.

RECOMMENDATION:

Who should we hire and why? Include onboarding strategy.

Why this is powerful:

Hiring decisions are emotional. Contrastive prompting forces you (and the AI) to examine b

Contract Risk Review

Review this client contract clause [paste clause].

Context: Irish digital services company, €120k project, 12-month timeline.

Provide contrastive legal risk analysis:

CORRECT INTERPRETATION:

- What this clause actually means

- Our obligations

- Client obligations

- Risk level: LOW/MEDIUM/HIGH

- Irish law considerations

INCORRECT INTERPRETATION:

- How companies often misread this type of clause

- Optimistic assumptions that lead to disputes

- Real examples of contracts with similar clauses going wrong

- Red flags that look minor but aren't

COMPARISON:

What happens if we interpret this correctly vs. incorrectly?

RECOMMENDATION:

Should we sign as-is, request changes, or walk away? Specific wording suggestions if changes needed.

Reality check:

I cannot stress enough – always have an actual solicitor review important contracts. But this technique catches obvious issues *before* you spend money on legal review, and it helps you ask better questions when you do consult your solicitor.

Technical Architecture Decision

Deciding between:

Option A: Monolithic architecture on AWS (€800/month)

Option B: Microservices on AWS (€1,400/month)

System requirements:

- 50k daily users expected (growing to 200k in 2 years)

- 3 developers on team

- Compliance: GDPR, basic security

- Timeline: MVP in 4 months

Show both correct and incorrect technical reasoning:

CORRECT TECHNICAL DECISION:

- Architecture choice with justification

- Scaling strategy

- Team capability match

- Cost trajectory over 3 years

- Technical debt implications

- Irish/EU compliance considerations

INCORRECT TECHNICAL DECISION:

- Over-engineering or under-engineering pitfalls

- "Best practice" advice that doesn't fit our context

- Hidden costs of each choice

- Why startups fail with the wrong architecture

- Technical decisions that look smart but aren't

COMPARISON:

3-year total cost and risk analysis for both architectures.

RECOMMENDATION:

Which to choose, and what's our migration path if we need to switch later?

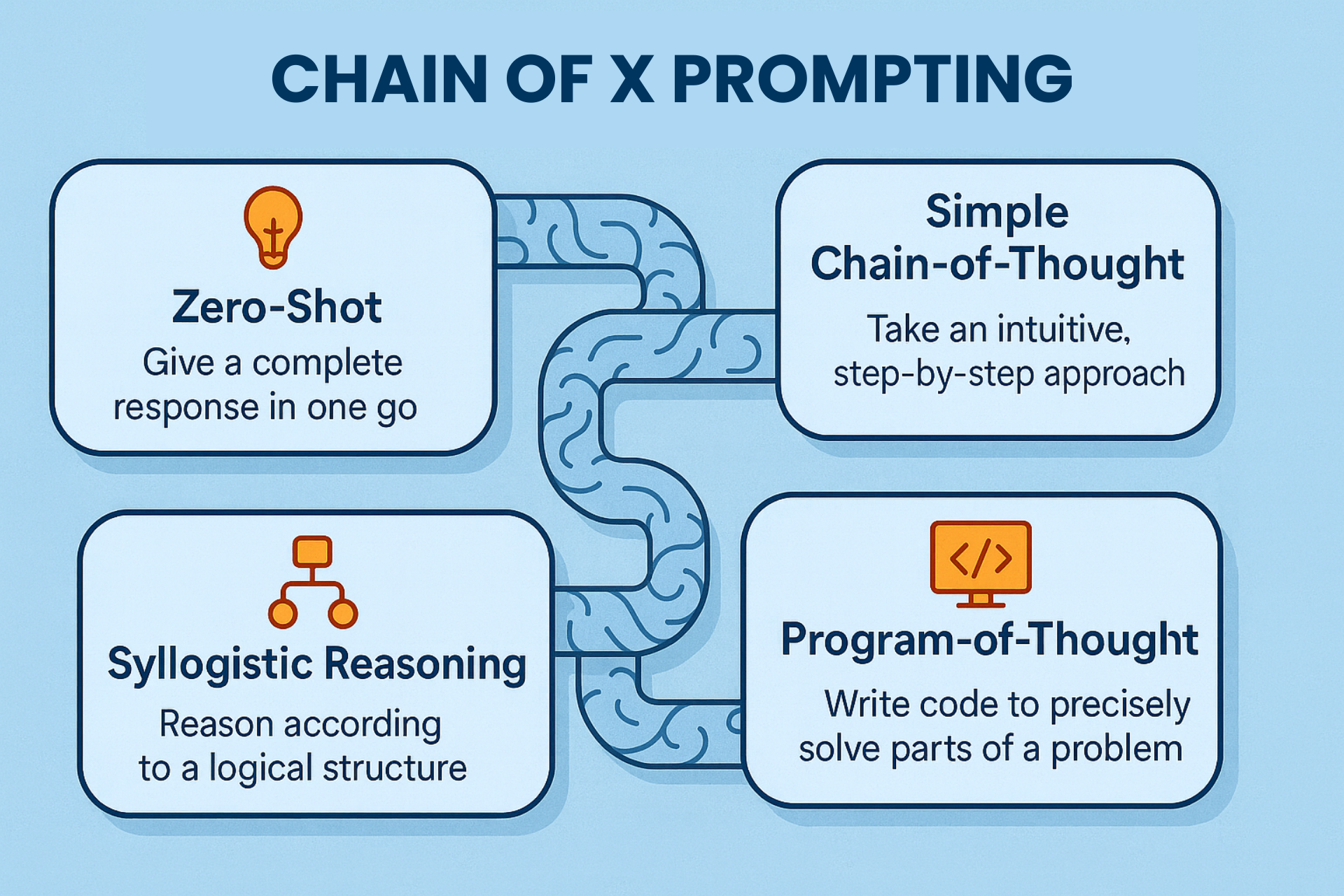

Contrastive Prompting vs Other AI Techniques

Let's be clear about when to use contrastive prompting versus other popular methods:

| Technique | Best for | Accuracy boost | Complexity | Token cost |
|---|---|---|---|---|
| Contrastive Prompting | High-stakes decisions; error-prone tasks; learning edge cases | 5–10% | Low | Neutral |
| Chain-of-Thought | Step-by-step reasoning, maths, logic puzzles | 3–8% | Low | +30% |
| Few-Shot Learning | Consistent formatting; pattern matching | 2–5% | Medium | +50% |
| Self-Critique | Quality control; post-generation review | 3–6% | Low | +40% |

Review quarterly: Are templates still relevant? Do they need updating for new regulations or market changes? Are there new high-value use cases?

Measuring Success: What to Track

Don't implement contrastive prompting blindly. Track concrete outcomes:

Accuracy Metrics

Before/After Comparison:

- Month 1: Establish baseline error rate in current decision process

- Months 2-4: Track error rate with contrastive prompting

- Target: 5-10% improvement (research-backed expectation)

Example:

A consulting firm tracked proposal accuracy:

- Before: 23% of proposals had scope/pricing issues flagged in review

- After: 9% had issues (61% reduction)

- Result: Fewer revision cycles, happier clients
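When you report figures like these, it is worth separating the relative reduction from the absolute drop in percentage points, because the two are easy to confuse. A small sketch using the numbers from the example above (the function name is illustrative):

```python
def error_reduction(before: float, after: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative reduction)."""
    absolute_points = (before - after) * 100
    relative = (before - after) / before
    return absolute_points, relative


points, relative = error_reduction(0.23, 0.09)
print(f"Absolute drop: {points:.0f} percentage points")  # 14 percentage points
print(f"Relative reduction: {relative:.0%}")             # 61%
```

Decide up front which of the two your target refers to, and track the same one every month.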

Time Efficiency

Measure:

- Decision time: Does it take longer to make decisions? (Initially yes, but should normalise)

- Revision cycles: Fewer mistakes = fewer revisions

- Stakeholder questions: Better reasoning = fewer "why did we decide this?" questions

Reality check: You'll spend 2-3 extra minutes per decision initially. But you'll save 20-30 minutes per revision cycle avoided. Net positive by week 3-4.
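To see where the break-even point sits, you can work out how often contrastive prompting has to prevent a revision cycle for the extra minutes to pay for themselves. The sketch below uses only the ranges quoted above; the function names and the 40-decision example are illustrative.

```python
def break_even_rate(extra_min_per_decision: float, min_saved_per_revision: float) -> float:
    """Share of decisions that must avoid one revision cycle to break even."""
    return extra_min_per_decision / min_saved_per_revision


def net_minutes_saved(decisions: int, revisions_avoided: int,
                      extra_min: float = 3, saved_min: float = 20) -> float:
    """Net time saved, using the pessimistic end of both ranges by default."""
    return revisions_avoided * saved_min - decisions * extra_min


worst_case = break_even_rate(3, 20)  # most overhead, least saving
best_case = break_even_rate(2, 30)   # least overhead, most saving
print(f"Break-even: between {best_case:.0%} and {worst_case:.0%} of decisions")

# Hypothetical month: 40 decisions, 8 revision cycles avoided.
print(net_minutes_saved(40, 8))  # 40
```

In other words, if roughly one decision in seven avoids a revision round, the technique pays for itself even on pessimistic numbers.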

Financial Impact

Track specific examples:

Cost avoidance:

- "Caught pricing error that would've cost €2.3k"

- "Identified compliance issue before submission"

- "Avoided hiring wrong candidate (estimated €15k+ in turnover costs)"

Revenue protection:

- "Prevented proposal that would've lost us the client"

- "Identified upsell opportunity we'd have missed"

Qualitative Benefits

Team confidence: Survey your team monthly:

- "How confident are you in AI-assisted decisions?" (1-10 scale)

- "How often do you second-guess AI outputs?" (Less = better)

Client trust: Track indirect signals:

- Fewer clarification questions on proposals

- Faster approval processes

- More repeat business

Common Mistakes (And How to Avoid Them)

Mistake #1: Using Contrastive Prompting for Everything

The problem:

Some decisions are too simple to benefit from contrastive analysis. "Should I schedule this meeting for Tuesday or Wednesday?" doesn't need a deep dive into incorrect scheduling approaches.

The fix:

Reserve contrastive prompting for:

- Decisions over €500 impact

- Complex, multi-factor choices

- High-risk areas (compliance, legal, financial)

- Learning situations (training new staff on decision-making)

Rule of thumb: If you can explain the decision in one sentence, skip the contrastive approach.
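If your team wants a consistent filter, the criteria above can be written down as a simple check. This is a sketch, not a standard: the `Decision` fields are hypothetical, and the cut-off of three factors for "multi-factor" is an assumption added for illustration (only the €500 threshold comes from the list above).

```python
from dataclasses import dataclass


@dataclass
class Decision:
    impact_eur: float             # estimated financial impact
    factor_count: int             # number of factors being weighed
    high_risk_area: bool = False  # compliance, legal, financial
    training_use: bool = False    # used to teach decision-making


def warrants_contrastive(d: Decision,
                         impact_threshold: float = 500,
                         factor_threshold: int = 3) -> bool:
    """True if the decision meets any of the criteria listed above."""
    return (
        d.impact_eur > impact_threshold
        or d.factor_count >= factor_threshold
        or d.high_risk_area
        or d.training_use
    )


print(warrants_contrastive(Decision(impact_eur=0, factor_count=1)))      # False
print(warrants_contrastive(Decision(impact_eur=2_300, factor_count=2)))  # True
```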

Mistake #2: Creating Strawman "Wrong" Answers

The problem:

Your prompt leads the AI to create ridiculous incorrect approaches that nobody would actually consider. "INCORRECT: Set all products on fire" isn't useful.

The fix:

Be specific about realistic mistakes:

Bad prompt:

“Show me the right and wrong way to price this product.”

Good prompt:

“Show me optimal pricing vs. common pricing mistakes Irish retailers make (undercutting margin, ignoring VAT, matching competitors blindly, overestimating volume).”

The incorrect approach should reflect real-world errors people actually make.

Mistake #3: Ignoring the "Wrong" Answer

The problem:

You ask for contrastive analysis, skim past the incorrect approach, and only read the correct one. This defeats the whole purpose.

The fix:

Actively engage with both answers:

1. Read the incorrect approach first

2. Check if it reflects mistakes you've made before

3. Look for blind spots in your current thinking

4. Use it as a checklist: "Are we accidentally doing any of this?"

The incorrect answer is often more valuable than the correct one because it's where you learn.

Mistake #4: No Follow-Up Questions

The problem:

You get one contrastive analysis and call it done, even if the AI's "incorrect approach" doesn't quite match reality or the "correct approach" has gaps.

The fix:

Treat it as a conversation:

“You mentioned [X] in the incorrect approach. Can you elaborate on why this specific mistake happens and give me a real example of a company that did this and failed?”

Or:

“The correct approach assumes [Y]. What if that assumption doesn't hold? Show me a contrastive analysis for that scenario too.”

Iterate until the analysis feels robust.

Mistake #5: Not Adapting Templates to Your Context

The problem:

You copy-paste a generic template without adding your specific context (Irish market, your industry, your company's constraints).

The fix:

Always customise with:

- Your company size and resources

- Irish/EU regulations relevant to you

- Your specific market conditions

- Your risk tolerance

- Your team's capabilities

Generic advice is often wrong advice. Context matters.

Mistake #6: Over-Trusting the AI

The problem:

Just because the AI used contrastive prompting doesn't mean it's infallible. If the AI has wrong information or misunderstands your context, it'll confidently contrast two wrong answers.

The fix:

- Verify key facts and figures independently

- Run important decisions past human experts

- Use contrastive prompting to improve your thinking, not replace it

- When money or compliance is involved, always get professional review

Think of contrastive prompting as giving you a really thorough colleague's opinion – valuable, but not a substitute for expertise.

When NOT to Use Contrastive Prompting

Be smart about where you apply this technique:

Skip contrastive prompting for:

- Simple factual lookups: "What's the current Irish VAT rate?" doesn't need wrong answers.

- Creative content: If you're writing marketing copy, contrasting with "bad copy" doesn't usually help – you just want good examples.

- Time-sensitive emergencies: If you need a decision in 30 seconds, use simpler prompting.

- Low-stakes decisions: Coffee supplier choice probably doesn't warrant deep contrastive analysis.

- When you have clear documentation: If you have step-by-step SOPs, just follow them.

Use contrastive prompting for:

- High-stakes decisions (financial, legal, strategic)

- Complex multi-factor choices (hiring, vendor selection, architecture)

- Error-prone processes (pricing, compliance, contract terms)

- Learning scenarios (training staff on decision frameworks)

- Risk assessment (identifying failure modes)

- Quality control (reviewing important outputs)

FAQ: Your Questions Answered

Does contrastive prompting work with any AI model?

Yes. ChatGPT, Claude, Gemini, local LLMs – they all respond to contrastive prompting. The technique is model-agnostic. You might see slightly better results with newer, more capable models (GPT-5, Claude Sonnet 4.5), but even older models show improvement.

Start with whatever AI tool you're already using. No need to switch models to try this.

Won't this make responses slower and more expensive?

Slower: Marginally – maybe 1-2 seconds more processing. But you'll save far more time by avoiding revision cycles.

More expensive: Token usage increases by ~30-50%, but this is offset by:

- Fewer iterations needed (each retry costs tokens too)

- Better first-attempt accuracy (fewer wasted queries)

- Mistakes avoided (which cost far more than tokens)

For a typical high-stakes decision (€5k+ impact), spending an extra €0.02 on tokens to get better analysis is a no-brainer.
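You can sanity-check the token overhead for your own setup with a few lines of arithmetic. In the sketch below, the baseline of 2,000 tokens and the price of €10 per million tokens are placeholder assumptions, not quotes from any provider; substitute your own figures.

```python
def extra_token_cost(base_tokens: int, overhead: float, price_per_million_eur: float) -> float:
    """Extra cost in euro of the additional tokens contrastive prompting uses."""
    return base_tokens * overhead * price_per_million_eur / 1_000_000


BASE_TOKENS = 2_000       # HYPOTHETICAL size of a normal response
PRICE_PER_MILLION = 10.0  # HYPOTHETICAL price in euro, check your provider

low = extra_token_cost(BASE_TOKENS, 0.30, PRICE_PER_MILLION)
high = extra_token_cost(BASE_TOKENS, 0.50, PRICE_PER_MILLION)
print(f"Extra cost per query: €{low:.3f} to €{high:.3f}")
```

Whatever your actual prices are, the point of the comparison is the order of magnitude: cents of extra tokens against a decision worth thousands.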

How do I know if the "incorrect approach" the AI shows is realistic?

Test it against your experience:

- Have you seen people make these mistakes?

- Do the failure modes sound plausible?

- Can you find real examples of these errors?

If the incorrect approach sounds absurd, refine your prompt to ask for realistic common mistakes, not silly ones.

Better prompt:

Show me the incorrect approach that "intelligent people actually use" and why it seems right but fails.

This forces the AI to create realistic contrasts, not strawmen.

Can I use contrastive prompting for content creation?

It depends. For factual content (guides, how-tos, analysis), yes – you can contrast accurate vs misleading information to ensure quality.

For creative content (brand copy, stories, slogans), it's less useful. You're better off with few-shot examples of good content.

Exception: Use it for content strategy decisions: "Show me high-converting email approaches vs approaches that get ignored."

What if the AI's "correct approach" is actually wrong?

This happens if:

- Your input context was incomplete or wrong

- The AI misunderstood your requirements

- Your industry has specific constraints the AI doesn't know

Solution: Add more context and iterate:

You suggested [X] as the correct approach. However, in Ireland/our industry/our situation, [Y constraint] applies. Redo the contrastive analysis accounting for [Y].

Always validate AI recommendations against your actual business context.

Should I show clients the "incorrect approach" in proposals?

Generally no. Clients don't need to see all your reasoning process – they want clear recommendations.

Exception: When educating clients about risks they're considering:

"Some companies respond to this situation by [incorrect approach], but here's why that typically fails in the Irish market... Instead, we recommend [correct approach]."

This positions you as the expert who helps them avoid common pitfalls.

Can contrastive prompting help with Irish-specific regulations?

Absolutely, but with a caveat: Always verify regulatory information independently. AI models don't have real-time updates on Irish law changes.

Good use:

Contrast compliant vs non-compliant approaches to [Irish regulation] in [scenario], explaining why the non-compliant approach violates [specific law/guideline].

Then verify with your solicitor, accountant, or relevant professional body.

Use it to structure your thinking, not replace professional advice.