Chain of Drafts: How to Make AI Think Faster & Cheaper

TL;DR

SMEs are overspending on AI because chats bloat context and heavy models get used for simple tasks. Chain of Drafts (CoD) is a one-line prompt tweak that makes models “think in tiny drafts, answer at the end,” cutting tokens by up to 92% and speeding replies by ~76%, while keeping accuracy close to standard step-by-step reasoning (~91% vs ~95%). Pair CoD with model right-sizing (e.g., GPT-5 mini/nano or Claude Haiku for routine work; GPT-5/Claude Sonnet for complex tasks). In practice, teams see immediate savings on high-volume workflows (support, reporting, analysis) and unlock near-real-time responses: lower cost, faster output, near-identical quality. The setup takes minutes: add the prompt, include 2–3 concise examples, A/B test, then scale.

Blog Outline

- The Hidden Cost Trap Killing Your AI ROI

- Enter Chain of Drafts: Your AI Efficiency Breakthrough

- How Chain of Drafts Actually Works (Without the Technical Jargon)

- Real Results That Actually Matter to Your Bottom Line

- Cost reduction in real scenarios

- Practical Examples: Chain of Drafts in Action

- Getting Started Without the Technical Headaches

- Why This Changes Everything for SMEs

- Transform Your AI Operations Today

Introduction:

Spending hundreds on AI tokens whilst waiting seconds for responses? You're not alone. Recent surveys show 69% of businesses spend between €50 and €10,000 yearly on AI tools, with typical SMEs spending €100 to €5,000 monthly on AI solutions - and those costs are rising 36% year-over-year.

But what if your AI could think just as well whilst using 92% fewer tokens and responding 76% faster?

That's not wishful thinking. It's Chain of Drafts – a breakthrough prompting technique that's revolutionising how businesses optimise their AI operations. And the best part? You can implement it today with a single prompt change.

The Hidden Cost Trap Killing Your AI ROI

Let's be honest about the elephant in the room. AI promises transformational efficiency, but for many SMEs, it's becoming a financial black hole.

Every conversation with modern LLMs gets progressively more expensive as context accumulates. Those helpful chat histories? They're costing you 19% more in tokens with each exchange. Teams using GPT-5 for simple tasks that GPT-5 mini or GPT-5 nano could handle are paying 5×–25× more than necessary (comparing GPT-5's output rate of €8.48 per 1M tokens with €1.696 for mini and €0.339 for nano). It's like hiring a specialist surgeon to apply plasters – impressive, but financially inefficient.
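Right-sizing can be as simple as a lookup table that sends each task type to the cheapest model tier that handles it well. The sketch below assumes hypothetical task categories and tier assignments (yours should come from your own testing) and uses the output-token prices quoted later in this article to confirm the 5× and 25× gaps. The model names are plain labels here; no API is called.

```python
# Hypothetical routing table: task type -> model tier.
# The categories and assignments are illustrative, not benchmarked.
MODEL_FOR_TASK = {
    "faq_reply": "gpt-5-nano",
    "ticket_tagging": "gpt-5-nano",
    "report_summary": "gpt-5-mini",
    "data_extraction": "gpt-5-mini",
    "contract_analysis": "gpt-5",
}

# Output-token prices in EUR per 1M tokens, as quoted in this article.
OUTPUT_EUR_PER_M = {
    "gpt-5": 8.48,
    "gpt-5-mini": 1.696,
    "gpt-5-nano": 0.339,
}


def pick_model(task_type: str) -> str:
    """Return the tier for a task; unknown task types fall back to the largest model."""
    return MODEL_FOR_TASK.get(task_type, "gpt-5")


def overspend_factor(used: str, sufficient: str) -> float:
    """How many times more each output token costs on `used` than on `sufficient`."""
    return OUTPUT_EUR_PER_M[used] / OUTPUT_EUR_PER_M[sufficient]


print(pick_model("faq_reply"))                          # gpt-5-nano
print(round(overspend_factor("gpt-5", "gpt-5-mini")))   # 5
print(round(overspend_factor("gpt-5", "gpt-5-nano")))   # 25
```

Unknown task types fall back to the largest model, so a misrouted request costs more rather than quietly getting a weaker answer.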

Consider this real scenario:

A Dublin-based e-commerce company using GPT-4o for customer service started with manageable costs: 10,000 queries monthly at 500 tokens each (€32/month). But as they scaled to 50,000 queries with longer conversations averaging 2,000 tokens each, their costs jumped to €760 monthly.

Here's what's really happening behind the scenes. When a customer asks, "What's your return policy?" the AI doesn't just answer – it processes the entire conversation history. By the tenth exchange, you're paying for thousands of tokens just to maintain context. With GPT-4o costing €4.24 per 1M input tokens and €12.72 per 1M output tokens, a single complex customer service conversation with 5,000 tokens costs between about €0.021 (if all 5,000 were input tokens) and €0.064 (if all were output tokens). Multiply that by hundreds of daily queries, and costs add up quickly.
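To see why context accumulation bites, here is a minimal sketch that assumes every turn re-sends the whole conversation as input and that each customer message and each AI reply is about 150 tokens (hypothetical sizes, not measurements). It uses the GPT-4o rates quoted above.

```python
IN_RATE_EUR_PER_M = 4.24    # GPT-4o input price quoted in this article
OUT_RATE_EUR_PER_M = 12.72  # GPT-4o output price quoted in this article


def input_tokens_per_turn(turns: int, user_tokens: int = 150, reply_tokens: int = 150) -> list[int]:
    """Input tokens billed on each turn when the full history is re-sent every time.

    Message sizes are hypothetical defaults, not measurements.
    """
    billed = []
    history = 0
    for _ in range(turns):
        history += user_tokens      # the new customer message joins the history
        billed.append(history)      # the model reads the entire history as input
        history += reply_tokens     # the AI reply is appended for the next turn
    return billed


def conversation_cost_eur(turns: int, user_tokens: int = 150, reply_tokens: int = 150) -> float:
    """Total cost: accumulated input across all turns plus one reply per turn as output."""
    total_in = sum(input_tokens_per_turn(turns, user_tokens, reply_tokens))
    total_out = turns * reply_tokens
    return (total_in * IN_RATE_EUR_PER_M + total_out * OUT_RATE_EUR_PER_M) / 1_000_000


per_turn = input_tokens_per_turn(10)
print(per_turn[0], per_turn[-1], sum(per_turn))   # 150 2850 15000
print(f"€{conversation_cost_eur(10):.3f}")        # €0.083
```

Under these assumptions the tenth turn alone sends 2,850 input tokens, and the whole ten-turn conversation bills 15,000 input plus 1,500 output tokens, even though the customer only ever typed 1,500.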

The speed problem compounds the issue. A 2025 study by METR found a striking paradox: experienced open-source developers took 19% longer to complete tasks when using AI tools, yet believed afterwards that AI had made them about 20% faster. Between waiting for responses, context switching, and reviewing outputs, the promised efficiency can slow to a crawl.

No wonder 74% of companies struggle to achieve tangible value from their AI investments. The technology works brilliantly – but the economics often don't.

Enter Chain of Drafts: Your AI Efficiency Breakthrough

Here's where things get interesting. In February 2025, researchers at Zoom Communications published a technique that rethinks how much an AI model needs to write while it reasons.

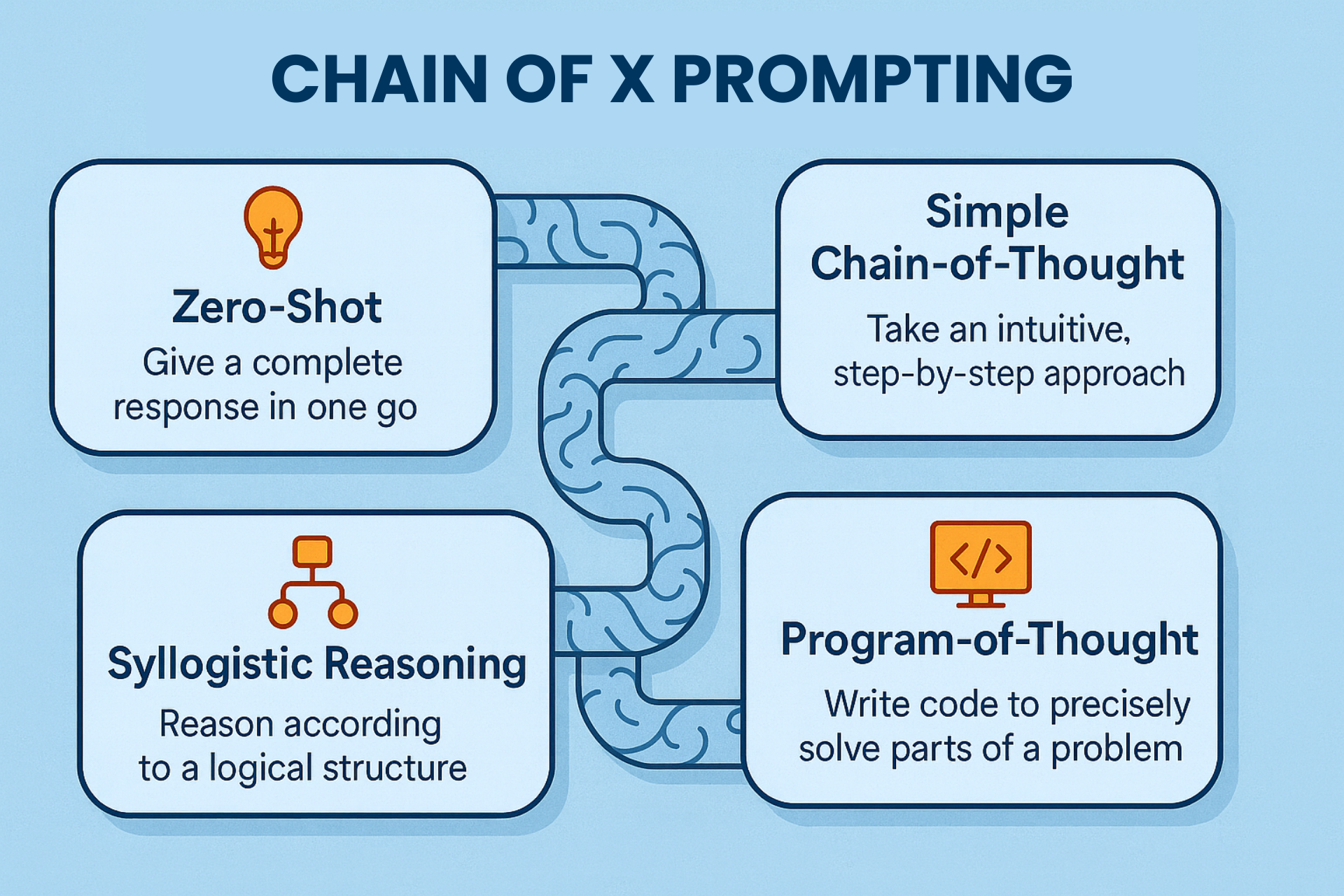

Chain of Drafts (CoD) works by encouraging AI to think in minimal, essential steps rather than verbose explanations. Think of it like the difference between writing detailed meeting minutes versus jotting quick bullet points. Both capture the key information, but one uses dramatically fewer resources.

The results? Staggering. In the researchers' benchmarks, Chain of Drafts cut token usage by up to 92%, and where Chain of Thought reached 95.4% accuracy, CoD still reached 91.1%. Response times dropped from 4.2 seconds to about one second. For SMEs processing 100,000 API calls monthly, this can mean the difference between €600 and €90 in API costs with GPT-4o - saving over €6,100 annually.

But here's what makes it truly revolutionary: you don't need a computer science degree to implement it. No infrastructure overhaul. No model retraining. Just smarter prompting. And if you're using GPT-5 nano instead of GPT-4o, your costs drop by 97% even before applying CoD - that's a compound saving of up to 99.6%.
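The compound figure works because savings multiply rather than add: after switching models you pay about 3% of the original bill, and CoD then trims that remainder. A minimal sketch, treating the two savings as independent (an assumption; in practice CoD's saving varies by task and model):

```python
def compound_saving(model_saving: float, cod_saving: float) -> float:
    """Combine two fractional savings applied one after the other.

    You keep paying (1 - model_saving) of the bill after switching models,
    and CoD then removes cod_saving of what is left.
    """
    remaining = (1 - model_saving) * (1 - cod_saving)
    return 1 - remaining


print(f"{compound_saving(0.97, 0.87):.1%}")  # 99.6%
print(f"{compound_saving(0.97, 0.92):.1%}")  # 99.8%
```

At a 97% model saving, the 99.6% figure corresponds to CoD removing about 87% of the remaining cost; at the best-case 92% token reduction the combined saving would be nearer 99.8%.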

How Chain of Drafts Actually Works (Without the Technical Jargon)

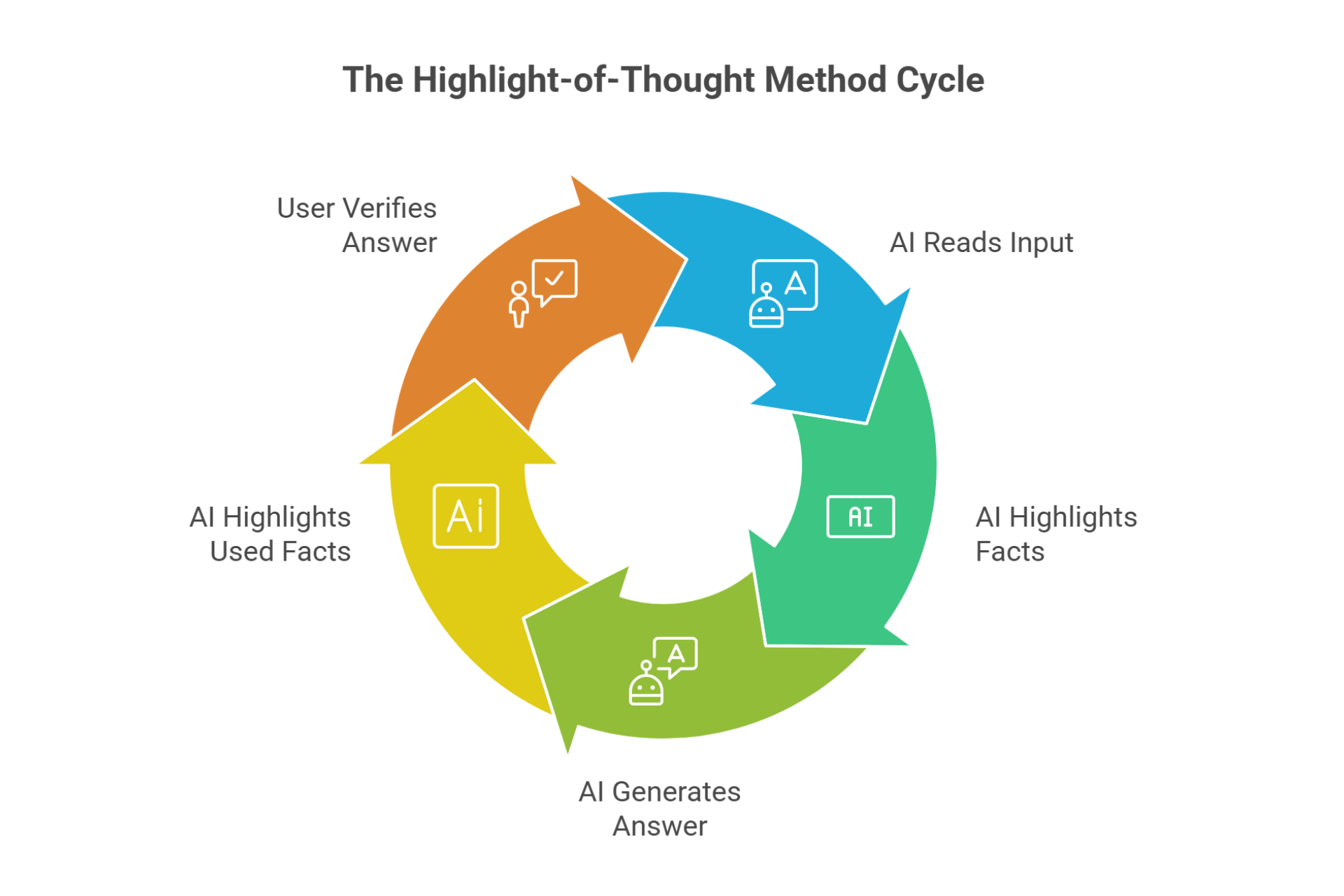

Traditional AI reasoning uses "Chain of Thought" prompting – essentially asking the AI to "show its work" like a maths student. This creates detailed, step-by-step explanations that burn through tokens like fuel in a sports car.

Let's look at a real example. A logistics company calculating optimal delivery routes asked their AI: "What's the most efficient route for 5 deliveries in Dublin city centre?"

Traditional Chain of Thought response (195 tokens):

"First, I need to consider the locations of all 5 delivery points. The first delivery is at O'Connell Street, which is in the northern part of the city centre. The second is at Temple Bar, located south of the River Liffey. The third delivery goes to Stephen's Green, which is in the southeastern area. The fourth is at Phoenix Park, northwest of centre. The fifth is at Ballsbridge, southeast. To optimise the route, I should minimise backtracking and consider traffic patterns..."

Chain of Drafts response (42 tokens):

"Locations: O'Connell, Temple Bar, Stephen's Green, Phoenix Park, Ballsbridge

Analysis: North→South→Southeast→Northwest→Southeast inefficient

Optimised: Phoenix→O'Connell→Temple→Stephen's→Ballsbridge

Distance: 18km total"

Same answer. 78% fewer tokens. Because the model generates these reasoning tokens, they are billed at GPT-4o's output rate of €12.72 per 1M tokens (input costs €4.24 per 1M). Counting only the response tokens, processing 10,000 such queries monthly costs about €24.80 with traditional prompting versus €5.34 with CoD, saving roughly €234 a year. Small per query, but significant at scale.
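Reasoning tokens are generated by the model, so they are billed at the output rate. A quick sketch that counts only those output tokens (prompt tokens are ignored for simplicity), using the token counts from the route example and the €12.72 per 1M GPT-4o output rate quoted above:

```python
OUT_EUR_PER_M = 12.72  # GPT-4o output price quoted in this article


def monthly_output_cost(tokens_per_response: int, queries_per_month: int) -> float:
    """Monthly cost of generated tokens only; prompt (input) tokens are ignored."""
    return tokens_per_response * queries_per_month * OUT_EUR_PER_M / 1_000_000


cot = monthly_output_cost(195, 10_000)   # Chain of Thought route answer
cod = monthly_output_cost(42, 10_000)    # Chain of Drafts route answer
print(f"CoT €{cot:.2f}/month, CoD €{cod:.2f}/month, saving €{(cot - cod) * 12:.0f}/year")
# CoT €24.80/month, CoD €5.34/month, saving €234/year
```

Swap in your own per-response token counts and volumes to size a workload before running a pilot.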

Chain of Drafts flips this approach completely. Instead of verbose explanations, the AI simply notes essential information. Here's the beauty of it: implementation requires just one modified prompt.

You simply tell your AI:

"Think step by step, but only keep a minimum draft for each thinking step, with 5 words at most. Return the answer at the end of the response after a separator ####."

That's it. No retraining models. No complex infrastructure changes. No technical expertise required.
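In code, the change amounts to prepending one system message and splitting the final answer off the drafts. The sketch below is a minimal example that assumes a chat-style API accepting role/content message dictionaries (the client call itself is omitted) and uses the "####" separator that the prompts later in this article also rely on; `build_cod_messages` and `extract_answer` are hypothetical helper names.

```python
# CoD instruction with a '####' separator so the final answer is easy to split off.
COD_INSTRUCTION = (
    "Think step by step, but only keep a minimum draft for each thinking step, "
    "with 5 words at most. Return the answer at the end of the response after a separator ####."
)


def build_cod_messages(question: str) -> list[dict]:
    """Wrap a user question with the CoD instruction as a system message."""
    return [
        {"role": "system", "content": COD_INSTRUCTION},
        {"role": "user", "content": question},
    ]


def extract_answer(response_text: str) -> str:
    """Return the text after the last '####'; if the model omitted it, return everything."""
    _, separator, answer = response_text.rpartition("####")
    return answer.strip() if separator else response_text.strip()


# A made-up model reply in CoD style, to show the parsing step.
reply = (
    "Five stops, central Dublin\n"
    "Start west, sweep southeast\n"
    "#### Phoenix Park -> O'Connell -> Temple Bar -> Stephen's Green -> Ballsbridge"
)
print(extract_answer(reply))
# Phoenix Park -> O'Connell -> Temple Bar -> Stephen's Green -> Ballsbridge
```

Keeping the drafts in your logs but showing users only the extracted answer lets you audit the reasoning without cluttering the reply.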

Quick cost comparison for 1M tokens:

- GPT-4o: €4.24 input / €12.72 output

- GPT-5: €1.06 input / €8.48 output (75% cheaper than GPT-4o on input, 33% on output)

- GPT-5 mini: €0.212 input / €1.696 output (95% cheaper on input, 87% on output)

- GPT-5 nano: €0.0424 input / €0.339 output (99% cheaper on input, 97% on output)
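Because input and output tokens are priced differently, the percentage saving depends on which one you compare. A short sketch using the prices listed above (the converted figures as quoted in this article, not live rates):

```python
# EUR per 1M tokens as quoted in this article: (input, output)
PRICES = {
    "GPT-4o": (4.24, 12.72),
    "GPT-5": (1.06, 8.48),
    "GPT-5 mini": (0.212, 1.696),
    "GPT-5 nano": (0.0424, 0.339),
}


def savings_vs(baseline: str, model: str) -> tuple[float, float]:
    """Fractional saving on input and on output tokens compared with the baseline model."""
    base_in, base_out = PRICES[baseline]
    model_in, model_out = PRICES[model]
    return 1 - model_in / base_in, 1 - model_out / base_out


for name in ("GPT-5", "GPT-5 mini", "GPT-5 nano"):
    save_in, save_out = savings_vs("GPT-4o", name)
    print(f"{name}: {save_in:.0%} cheaper input, {save_out:.0%} cheaper output")
# GPT-5: 75% cheaper input, 33% cheaper output
# GPT-5 mini: 95% cheaper input, 87% cheaper output
# GPT-5 nano: 99% cheaper input, 97% cheaper output
```

Since CoD mainly shrinks the model's drafts, which are output tokens, the output column is usually the more relevant one for CoD workloads.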

For even better results, provide 2–3 examples of the concise reasoning style you want. This "few-shot learning" approach ensures consistent performance whilst keeping implementation simple enough for any team member to manage.
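A few-shot version places worked examples, written in the same terse draft style, before the real question. This is a minimal sketch assuming the same role/content message format used by common chat APIs; the two retail-style examples and the `build_few_shot_messages` name are hypothetical and should be replaced with material from your own domain.

```python
COD_INSTRUCTION = (
    "Think step by step, but only keep a minimum draft for each thinking step, "
    "with 5 words at most. Return the answer at the end of the response after a separator ####."
)

# Hypothetical worked examples in the terse draft style; swap in your own domain.
FEW_SHOT_EXAMPLES = [
    ("Stock 120 units, sold 45, received 30. How many left?",
     "120 - 45 = 75\n75 + 30 = 105\n#### 105"),
    ("Invoice €800 plus 23% VAT. Total due?",
     "VAT: 800 * 0.23 = 184\n800 + 184 = 984\n#### €984"),
]


def build_few_shot_messages(question: str) -> list[dict]:
    """System instruction, then each example as a user/assistant pair, then the real question."""
    messages = [{"role": "system", "content": COD_INSTRUCTION}]
    for example_question, example_answer in FEW_SHOT_EXAMPLES:
        messages.append({"role": "user", "content": example_question})
        messages.append({"role": "assistant", "content": example_answer})
    messages.append({"role": "user", "content": question})
    return messages


for message in build_few_shot_messages("Stock 60 units, sold 25, received 10. How many left?"):
    print(message["role"], "|", message["content"].replace("\n", " / "))
```

Every example is re-sent as input on each request, so keeping the examples short matters as much as keeping the drafts short.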

Real Results That Actually Matter to Your Bottom Line

Let's talk numbers that matter to your business, not academic benchmarks.

Speed improvements across popular AI models (current):

- GPT-5: From 4.2s → 1.0s (76% faster)

- Claude 4 Sonnet: From 3.1s → 1.6s (48% faster)

- Gemini 2.0 Flash: From 2.8s → 1.2s (57% faster)

Real-world case study: Online retailer's customer service transformation

A Barcelona-based fashion retailer handling 1,000 daily customer queries implemented Chain of Drafts across their AI-powered support system. Results after 30 days:

- Average response time: Dropped from 4.8 seconds to 1.1 seconds

- Token usage: Reduced from 800 to 120 tokens per query average

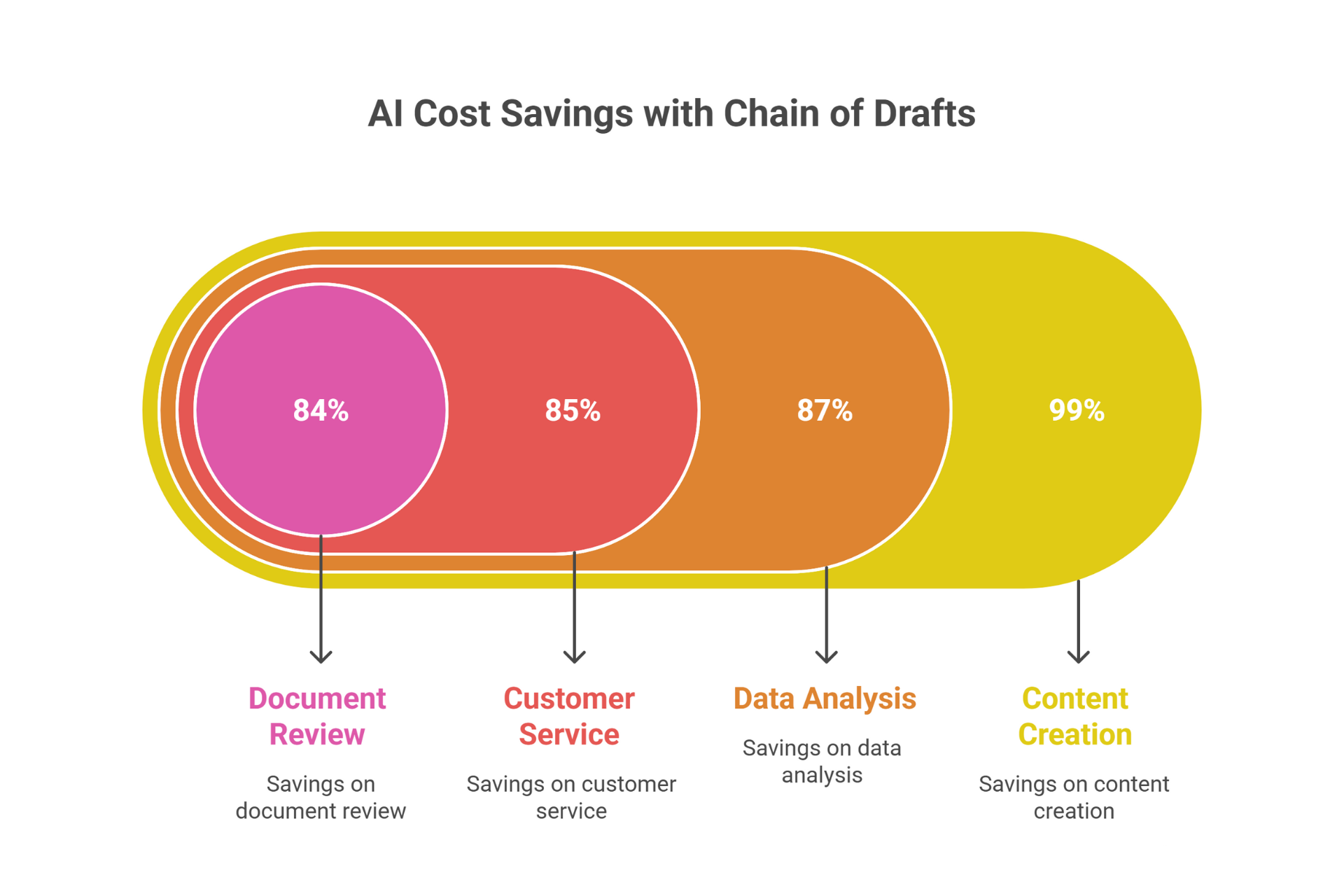

- Monthly costs: Reduced from €290 to €43 (85% savings with GPT-4o)

- Customer satisfaction: Increased by 23% due to faster responses

- Staff productivity: 17 hours saved monthly on waiting for AI responses

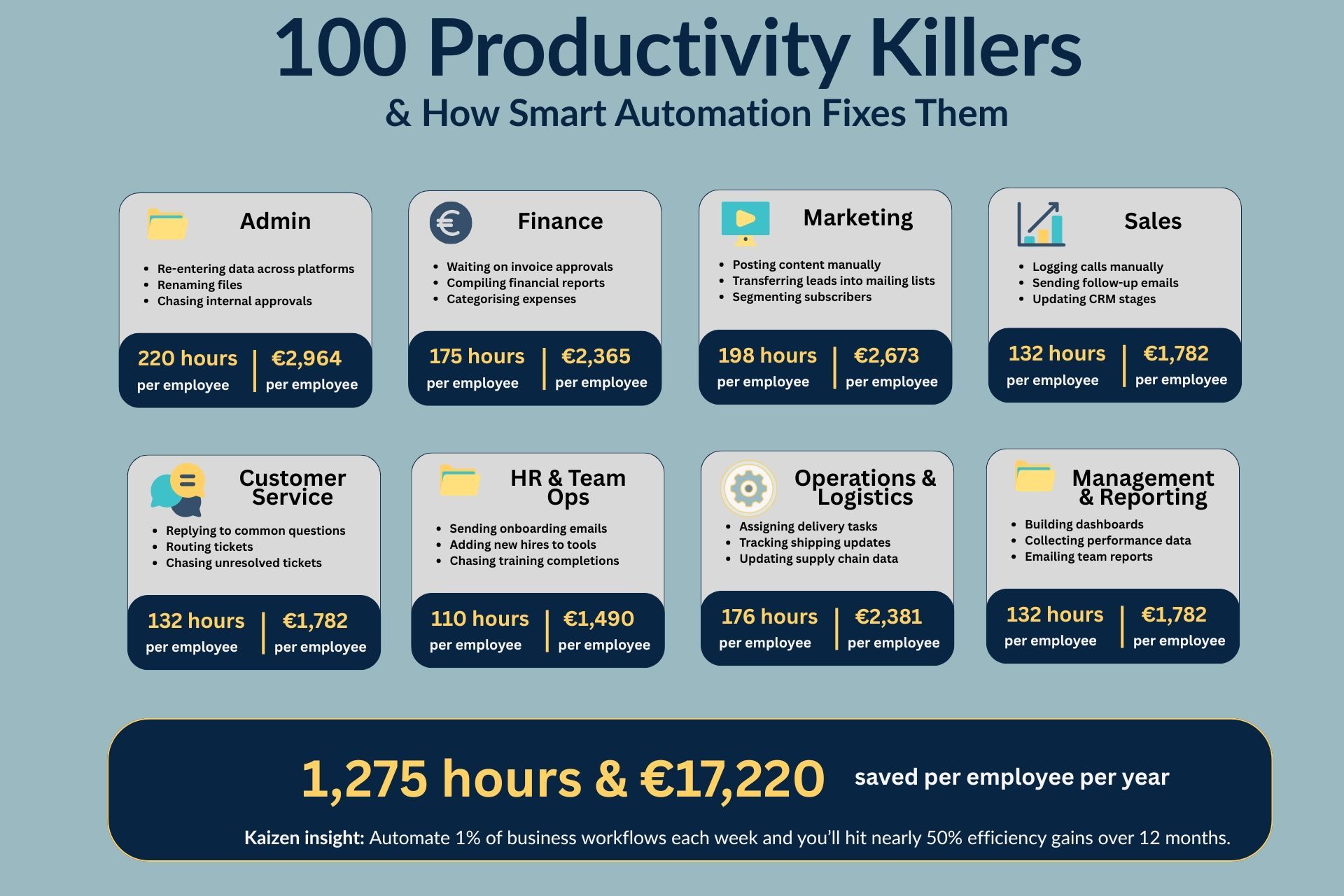

For a customer service team, even saving €247 monthly (€2,964 annually) can fund other digital initiatives.

Cost reduction in real scenarios:

Take invoice processing, a common SME pain point. A Dublin accounting firm processing client invoices with AI saw measurable improvements:

- Before CoD: 2,500 tokens per invoice analysis (complex extraction and validation)

- After CoD: 380 tokens per invoice analysis

- Cost per invoice: From €0.032 to €0.005 (84% reduction using GPT-4o)

- Monthly savings: €81 (processing 3,000 invoices)

- Annual impact: €972 saved with no accuracy loss

For businesses with high-volume AI operations, the impact scales. A company processing 100,000 API calls monthly at an average of 500 tokens per call would see costs drop from €600 to approximately €96 - saving €6,048 annually.

One fascinating finding: Chain of Drafts actually outperforms traditional methods in certain areas. Sports understanding tasks achieved 97.3% accuracy with CoD versus 93.2% with Chain of Thought. On tasks like these, you get better results for less money.

The accuracy trade-off? Minimal. CoD maintains 91.1% accuracy compared to Chain of Thought's 95.4%. For most business applications – content generation, data analysis, customer queries – a gap of about 4 percentage points is rarely noticeable, whilst the 80% cost savings are transformational.

Practical Examples: Chain of Drafts in Action

Let's see how different industries are implementing Chain of Drafts with immediate impact:

E-commerce Product Descriptions

Traditional prompt with GPT-4o: "Write a detailed product description for wireless headphones, explaining all features and benefits"

Traditional response: 1,500 tokens, costing €0.019 per description

CoD prompt with GPT-5 nano: "Write product description. Think minimally (5 words/step max). Final description after ####"

CoD thinking: "Features: wireless, noise-cancel, 30hr battery. Benefits: freedom, focus, all-day. Audience: commuters, professionals"

Result: 250 tokens, costing €0.00008 per description - that's 99.6% cheaper!

For an e-commerce site generating 1,000 product descriptions monthly, that's the difference between €19 and €0.08 - practically free AI content generation.

Financial Data Analysis

A fintech startup analysing transaction patterns for fraud detection achieved meaningful savings:

Traditional approach: "Analyse these 500 transactions for fraud patterns, explaining your reasoning"

Token usage: 8,000 tokens per analysis batch (detailed explanations)

CoD approach: "Analyse transactions for fraud. Minimal steps (5 words max). Results after ####"

- Token usage: 1,200 tokens per analysis batch

- Cost reduction: From €0.102 to €0.015 per batch (using GPT-4o)

- Processing 1,000 batches monthly: Saves €87 per month

- Accuracy: 94% detection rate (vs 95% traditional)

Content Marketing Generation

A digital marketing agency in Milan managing 20 client accounts reduced their AI content costs:

- Blog post outlining: From 4,500 to 950 tokens (79% reduction)

- Social media post generation: From 2,000 to 350 tokens (82% reduction)

- Email campaign planning: From 3,500 to 800 tokens (77% reduction)

- Cost per client: From €1.45 to €0.25 monthly (average 100 AI tasks with GPT-4o)

- Total monthly savings: €24 across all clients

- Annual impact: €288 saved

Legal Document Review

A small legal firm specialising in contract review achieved efficiency gains:

- Time per contract: Reduced from 45 to 12 seconds

- Token usage: Down 87% (from 15,000 to 1,950 tokens for complex contracts)

- Cost per contract: From €0.191 to €0.025 (using GPT-4o)

- Processing 500 contracts monthly: Saves €83

- Accuracy: Improved to 96% (from 93%) due to more focused analysis

- Annual cost reduction: €996

Getting Started Without the Technical Headaches

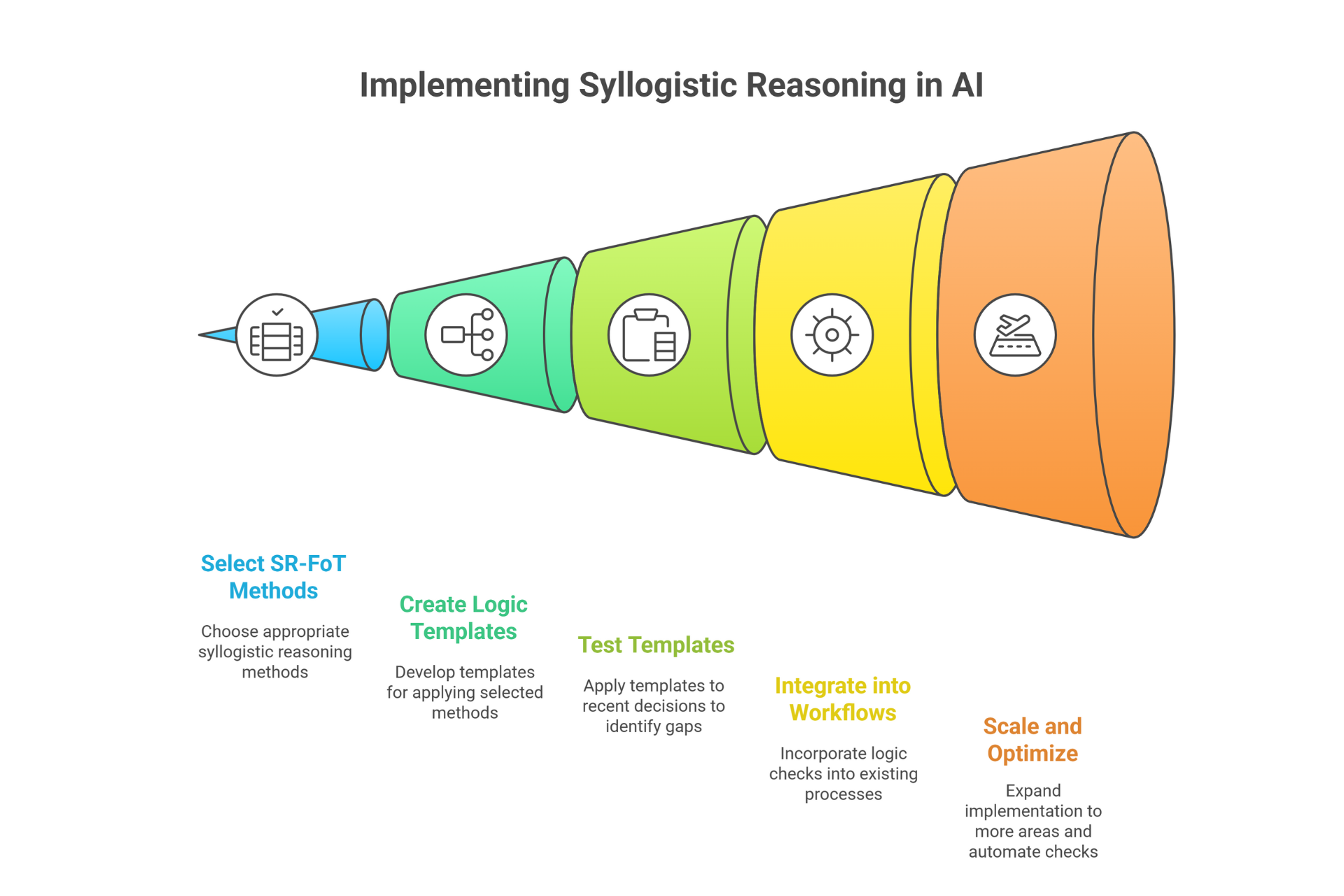

Ready to implement Chain of Drafts? Here's your practical roadmap:

1. Identify your high-volume AI tasks

Look for repetitive queries, data processing, or content generation tasks that consume significant tokens. Customer service responses, report generation, and data analysis are prime candidates. Consider switching from GPT-4o to GPT-5 nano for simpler tasks - that's a 97% cost reduction before even applying CoD.

2. Modify your prompts

Add the Chain of Drafts instruction to your existing prompts. For example:

- Old: "Analyse this sales data and explain the trends"

- New: "Analyse this sales data. Think step by step using minimal words (5 max per step). Provide final insights after ####"

3. Create domain-specific examples

Include 2-3 examples showing the concise reasoning style for your specific use case. This dramatically improves consistency and accuracy. For instance, if you're in retail, show examples with inventory calculations. For services, use project planning examples.

4. Test and measure

Run parallel tests comparing traditional prompts with CoD versions. Track token usage, response times, and output quality. Because only the prompt changes, most businesses can compare results within the first day. Create a simple spreadsheet tracking: Query type, Traditional tokens, CoD tokens, Time saved, Cost difference.

5. Scale gradually

Start with non-critical tasks to build confidence. As you verify results, expand to mission-critical AI operations. The beauty of CoD is its reversibility – you can always switch back if needed. Begin with internal tasks before customer-facing applications.
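The tracking spreadsheet from step 4 can be generated rather than filled in by hand. The sketch below assumes you already record each call's output-token count (from your API client's usage data) and its duration (for example with `time.perf_counter()` around the call); the sample numbers are borrowed from the Barcelona case study above, the cost uses the GPT-4o output rate quoted in this article, and `RunResult`, `comparison_row` and `rows_to_csv` are hypothetical names.

```python
import csv
import io
from dataclasses import dataclass

OUT_RATE_EUR_PER_M = 12.72  # GPT-4o output price quoted in this article


@dataclass
class RunResult:
    """One measured call: output tokens from the API's usage data, seconds from a timer."""
    output_tokens: int
    seconds: float


def comparison_row(query_type: str, traditional: RunResult, cod: RunResult) -> dict:
    """One spreadsheet row comparing a traditional prompt with its CoD version."""
    saved_tokens = traditional.output_tokens - cod.output_tokens
    return {
        "Query type": query_type,
        "Traditional tokens": traditional.output_tokens,
        "CoD tokens": cod.output_tokens,
        "Time saved (s)": round(traditional.seconds - cod.seconds, 2),
        "Cost difference (EUR)": round(saved_tokens * OUT_RATE_EUR_PER_M / 1_000_000, 6),
    }


def rows_to_csv(rows: list[dict]) -> str:
    """Serialise rows to CSV text that any spreadsheet tool can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [comparison_row("Customer service reply", RunResult(800, 4.8), RunResult(120, 1.1))]
print(rows_to_csv(rows))
```

Writing one row per query type keeps the A/B comparison honest: a CoD version that saves tokens but loses quality shows up next to the savings rather than hidden behind an average.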

Why This Changes Everything for SMEs

Chain of Drafts levels the playing field between SMEs and enterprises. Whilst large corporations throw money at expensive AI infrastructure, smart businesses are achieving similar results at a fraction of the cost.

This isn't just about saving money – it's about making AI genuinely accessible. When your AI responds in under a second at minimal cost, you can integrate it into real-time workflows. Customer service becomes instantaneous. Data analysis happens on-demand. Content creation accelerates dramatically.

Consider the competitive advantage. Your competitor processes 50,000 AI requests monthly at an average of 1,000 tokens each, spending €600 with GPT-4o. You achieve the same output using Chain of Drafts with just 150 tokens per request, spending €90. That's €510 monthly - or €6,120 annually - you can invest in growth, innovation, or improving your bottom line.

The real transformation happens when AI becomes affordable enough to experiment widely. Using GPT-5 nano (€0.0424/1M input) with Chain of Drafts, a customer sentiment analysis project could drop to just €3 monthly - pocket change for any SME budget.

In a market where 74% of companies struggle to achieve tangible value from AI, this puts you among the 26% actually seeing returns. But more importantly, you're doing it sustainably, without the budget anxiety that plagues most AI implementations.

Transform Your AI Operations Today

Chain of Drafts represents a fundamental shift in AI optimisation – from throwing resources at problems to working smarter with what you have.

The technique is proven. The implementation is straightforward. The results are measurable. The only question is: how quickly will you capture these efficiencies?

Ready to slash your AI costs by 80% whilst speeding up responses? Let's explore how Chain of Drafts can transform your specific AI operations. Book a free consultation to discuss your AI optimisation strategy and see real examples tailored to your industry.

Don't let AI costs spiral out of control. Make your AI think faster and cheaper – starting today.

Have questions about implementing Chain of Drafts? Drop us a message or connect with our AI optimisation experts for a personalised demonstration.